Underdog Overdrive

First, a PSA:

In keeping with my apparent ongoing role as The Guy Who Keeps Getting Asked to Talk About Subjects In Which He Has No Expertise (and for those of you who didn’t see the Facebook post), The Atlantic solicited from me a piece on Conscious AI a few months back. The field is moving so fast that it’s probably completely out of date by now, but a few days ago they put it out anyway. (Apologies for the lack of profanity therein. Apparently they have these things called “journalistic standards”.)

And Now, Our Feature Presentation:

I am dripping with sweat as I type this. The BUG says I am as red as a cooked lobster, which is telling in light of the fact that the game I have been playing is the only VR game in my collection that you play sitting down. It should be a game for couch potatoes, and I’m sweating as hard as if I’d just done 7k on the treadmill. And I still haven’t even made it out of the Killbox. I haven’t even got into the city of New Brakka yet, and until I can get into that EM-shielded so-called Last Free City, the vengeful-nanny AI known as Big Sys is gonna keep hacking into my brother’s brain until it’s nothing but a protein slushy.

The game is Underdogs, the new release from One Hamsa, and it has surprisingly deep lore for a title that consists mainly of robots punching each other in the face. I know—even though I’ve encountered very little of it in my playthroughs so far—because I wrote a lot of it. After a quarter-century of intermittent gigs in the video game business, on titles ranging from Freemium to Triple-A, this is the first time I’ve had a hand in a game that’s actually made it to market (unless you want to count Crysis 2—which, set in 2022, is now a period piece— and for which I only wrote the novelization based on Richard Morgan’s script.)

Underdogs is a weird indie chimera: part graphic novel, part tabletop role-play, part Roguelike mech battle. It’s that last element that’s the throbbing, face-pounding heart of the artifact, of course, the reason you play in the first place. You climb into your mech and hurtle into the KillBox and it’s only after an hour of intense metal-smashing physics that you realize you’re completely out of breath and your headset is soaked like a dishrag. The other elements are mere connective tissue. Combat happens at night; during daylight hours you’re hustling for upgrades and add-ons (stealing’s always an option, albeit a risky one), negotiating hacks and sabotage for the coming match, getting into junkyard fights over usable salvage. Sometimes you find something in the rubble that boosts your odds in the ring; sometimes the guy hired to fix your mech fucks up, leaves the machine in worse shape than he found it, and buggers off with your tools. Sometimes you spend the day desperately trying to find parts to fix last night’s damage so you won’t be going into the ring tonight with a cracked cockpit bubble and one arm missing.

All this interstitial stuff is presented in a kind of interactive 2.5D graphic novel format; the outcomes of street fights or shoplifting gambits are decided via automated dice roll. Dialog unspools via text bubble; economic transactions, via icons and menus. All very stylized, very leisurely. Take your time. Weigh your purchase options carefully. Breathe long, calm breaths. Gather your strength. You’re gonna need it soon enough.

Because when you enter the arena, it’s bone-crunching 3D all the way.

Mech battles are a cliché, of course, from Evangelion to Pacific Rim. But they’re also the perfect format for first-person VR, a conceit that seamlessly resolves one of the biggest problems of the virtual experience. Try punching something in VR. Swing at an enemy with a sword, bash them with a battle-axe. See the problem? No matter how good the physics engine, no matter how smooth the graphics, you don’t feel anything. Maybe a bit of haptic vibration if your controllers are set for it— but that hardly replicates the actual impact of steel on bone, fist in face. In VR, all your enemies are weightless.

In a mech, though, you wouldn’t feel any of that stuff first-hand anyway. You climb into the cockpit and wrap your fingers around the controllers for those giant robot arms outside the bubble; lo and behold, you can feel that in VR too, because here in meatspace you’re actually grabbing real controllers! Now, lift your hands. Bring them down. Smash them together. Watch your arms here in the cockpit; revel in the way those giant mechanical waldos outside mimic their every movement. One Hamsa has built their game around a format in which the haptics of the game and the haptics of the real thing overlap almost completely. It feels satisfying, it feels intuitive. It feels right.

(They’ve done this before. Their first game, RacketNX, is hyperdimensional racquetball in space: you stand in the center of a honeycomb-geodesic sphere suspended low over a roiling sun, or a ringed planet, or a black hole. The sphere’s hexagonal tiles are festooned with everything from energy boosters to wormholes to the moving segments of worm-like entities (in a level called “Shai-Hulud”). You use a tractor-beam-equipped racket to whap a chrome ball against those tiles. But the underlying genius of RacketNX lay neither in glorious eye-candy nor in the inventive and ever-changing nature of the arena’s tiles, but in the simple fact that the player stands on a small platform in the middle of the sphere; you can spin and jump and swing, but you do not move from that central location. With that one brilliant conceit, the devs didn’t just sidestep the endemic VR motion sickness that results from the eyes saying I’m moving while the inner ears say I’m standing still; they actually built that sidestep into the format of the game, made it an intrinsic part of the scenario rather than some kind of arbitrary invisible wall. They do something similar in Underdogs, turning a limitation of the tech into a seamless part of the world.)

As I said, I haven’t got out of the Killbox yet. I’ve made it far enough to fight the KillBox champion a couple of times [late-breaking update: numerous times!], but he keeps handing my ass to me. [Even more late-breaking update: I have vanquished the Killbox champion. Many times now. Now it’s the next arena where I keep getting squashed like a bug.] This may be partly because I’m old. It probably has more to do with the fact that when you die in this game you go all the way back to square one, and have to go through those first five days of fighting all over again (Roguelike games are permadeath by definition; no candy-ass save-on-demand option here). That’s not nearly as repetitive as you might think, though. Thanks to the scavenging, haggling, and backroom deals cut between matches, your mech is widely customizable from match to match. Your hands can consist of claws, wrecking balls, pile-drivers, blades and buzz-saws. Any combination thereof. You can fortify your armor or amp your speed or add stun-gun capability to your strikes. I haven’t come close to exploring the various configurations you can bring into the arena, the changes you can make between matches. And while you’re only fighting robots up until the championship bout with your first actual mech opponent, there’s a fair variety of robots to be fought: roaches and junkyard dogs and weird sparking tetrapods flickering with blue lightning. Little green bombs on legs that scuttle around and try to blow themselves up next to you. And it only adds to the challenge when one wall of the arena slides back to reveal rows of grinding metal teeth ready to shred you if you tip the wrong way.

Apparently there are four arenas total (so far; the game certainly has expansion potential). Apparently the plot takes a serious turn after the second. I’ll find out eventually. If you’ve got that far, please: no spoilers.

*

But I mentioned the Lore.

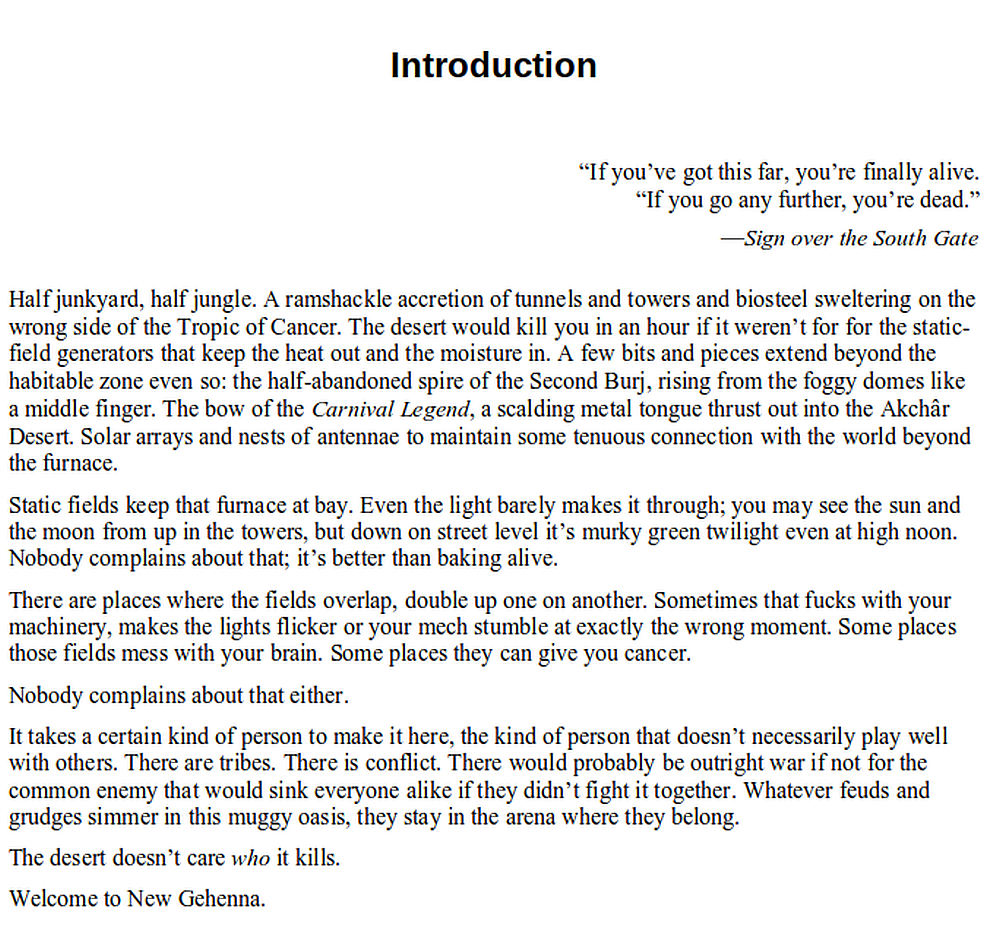

They brought me in to help with that. They already had the basic premise: Humanity, in its final abrogation of responsibility, has given itself over to an AI nanny called Big Sys who watches over all like a kindly Zuckerborg. There’s only one place on earth where Big Sys doesn’t reach, the “Last Free City”: an anarchistic free-for-all where artists and malcontents and criminals— basically, anyone who can’t live in a nanny state— end up. They do mech fighting there.

My job was to flesh all that stuff out into a world.

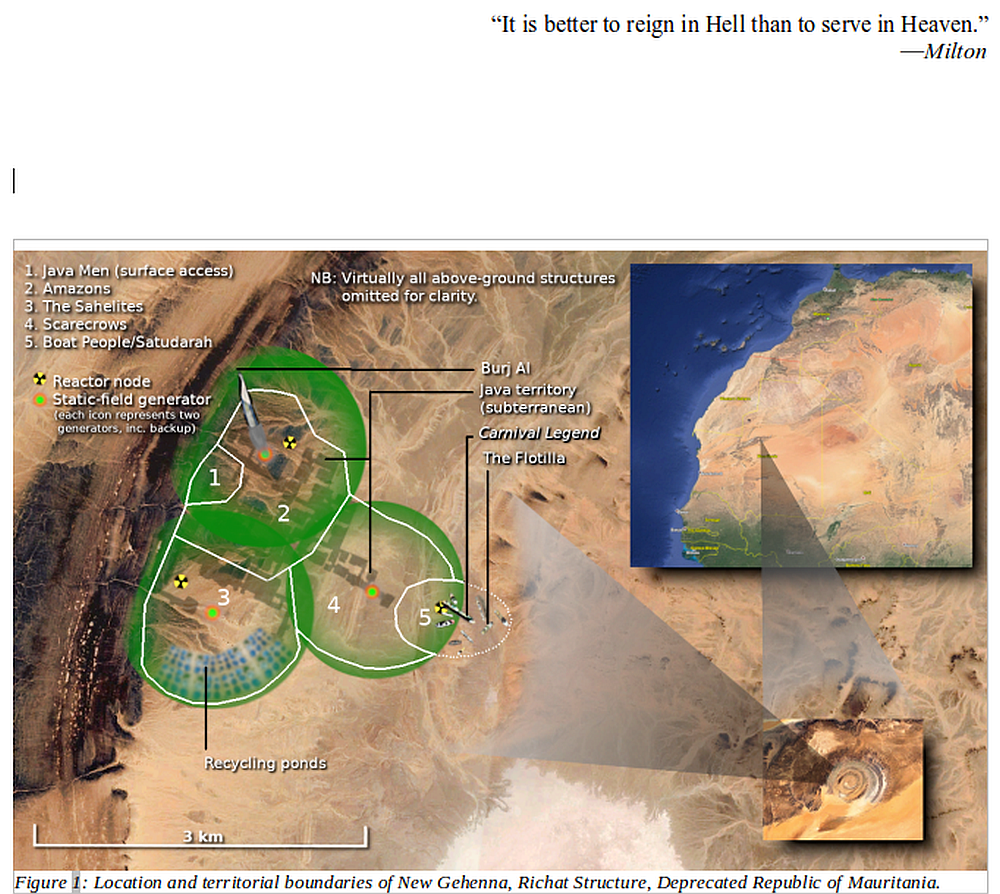

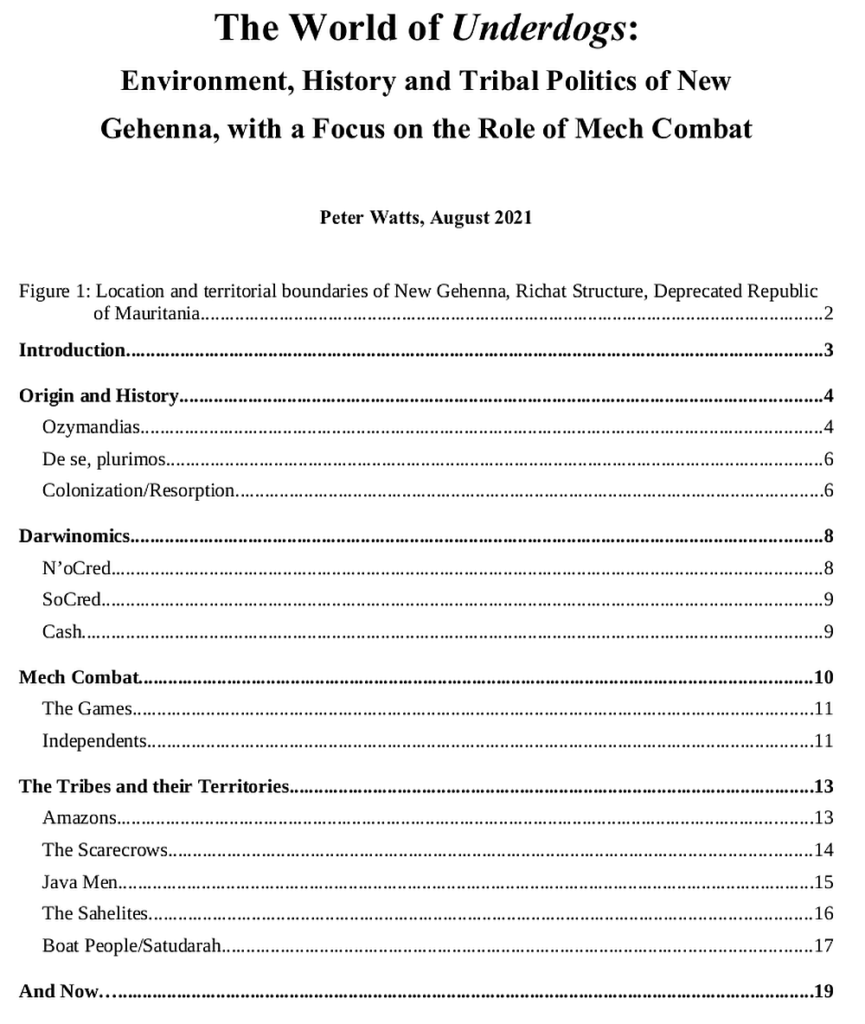

So I wrote historical backgrounds and physical infrastructure. I wrote a timeline explaining how we got there from here, how New Gehenna (as I called it then) ended up as the beating heart of the global mech-fighting world. I developed “Tribes”—half gang, half gummint—with their own grudges and ideologies: The Satudarah, the Java men, the Sahelites and the Scarecrows and the Amazons. I gave them territories, control over various vital resources. I built specific characters like I was crafting a D&D party, NPCs the player might encounter both in and out of the ring. I fleshed out a couple of bros from the Basics, the lower-class part of London where surplus Humanity all made do on UBI. Economic and political systems. I wrote thousands of words, forty, fifty single-spaced pages of this stuff.

To give you a taste, this is how one of my backgrounders started off (as usual, click to embiggen):

And here’s one of my Tables of Contents:

Now you know what I was doing when I wasn’t writing Omniscience.

*

I don’t know how much of this survived. It’s in the nature of the biz that the story changes to serve the game. I’m told the backstory I developed remains foundational to Underdogs; its bones inform what you experience whether they appear explicitly or not. But much has changed: the city is New Brakka, not New Gehenna. The static fields now serve to jam Big Sys, not to keep out the desert heat. (The desert in this world isn’t even all that hot any more, thanks to various geoengineering megaprojects that went sideways.) I think I see hints of my Tribes and territories as Rigg and King prowl the backstreets: references to “caveman territory”, or to bits of infrastructure I inserted to give the factions something to fight over. Not having even breached the city gates, I don’t know how many of those Easter eggs might be waiting for me, but assuming I can get out of the KillBox I’m definitely gonna be keeping an eye out. (I think maybe the Spire survived in some form. I’m really, really hoping the Pink Widow did too, but I doubt it.)

Doesn’t really matter, though. I love the fact that Underdogs has a fairly deep backstory, and I’m honored to have had even a small part in building it; you could write entire novels set in New Brakka. But this game… this game is definitely not plot-driven. It is pure first-person adrenaline dust-up, with a relentlessly grim and beautiful grotto-punk aesthetic (yes, yet another kind of -punk; deal with it) that saturates everything from the soundtrack to the voice acting to the interactive cut-scenes. I admit I was skeptical of those cut-scenes at first; having steeped myself in Bioshock and Skyrim and The Last Of Us all these years, were comic-book cut-outs really going to do it for me?

But yes. Yes they totally did. Don’t take my word for it: check out the YouTube reviews. Go over to Underdogs’ Steam page, where the hundreds of user reviews are “Overwhelmingly Positive”. The only real complaint people have about this game is that there isn’t more of it.

This game was not made for VR: VR was made for this game.

Most of you remain in pancake mode. VR is too expensive, or you get nauseous, or you’ve erroneously come to equate “VR” with “Oculus” and you (quite rightly) don’t want anything to do with the fucking Zuckerborg. But those of you who do have headsets should definitely get this game. Then you should talk to all those other people, show them that the Valve Index is actually superior to the Oculus in a number of ways (and it supports Linux!), and introduce them to Underdogs. Show them what VR can be, when you’re not puking all over the floor because you can’t get out of smooth-motion mode and no one told you about Natural Locomotion. Underdogs is the best ambassador for VR since, well, RacketNX. It deserves to be a massive hit.

Now I’m gonna take another run at that boss. I’ll let you know when I break out.

Damn. I nearly bought a Valve Index earlier that year, but got a message from the cat rescue org I regularly donate to saying they were in particularly dire straits due to a slew of vet bills, so I donated the slush fund for the Index to them instead.

Not regretting it in the slightest, but I really wish I had a VR headset right now, because that game sounds awesome and right up my alley. Well, perhaps later this year.

You made the right choice. Without a doubt.

Can’t wait until october! I should be able to get a computer with enough guts to play me sum underdogs.

But your Atlantic article is what’s really holding my attention. Your description of free-energy minimization and my tendency to anthropomorphize everything had me thinking of DishBrain (and any other conscious entities) as a kind of deeply committed status quo activist.

And then I got to wondering. Have the Kardashians ever gone off the air? Is that when it woke up? Is that why we can’t get rid of them? Is that why face-tuning algorithms don’t work on Kim Kardashian? She’s the lowest energy level.

Is that our future? A human face being tuned into a Kardashian… forever.

Do not lose hope. There was a time when you couldn’t get away from Paris Hilton no matter how deeply you buried yourself. Now, far as I can tell, everyone’s forgotten she even existed. This too shall pass.

Or, as Jon Stewart recently put it: “On the up side, I’m told that some day the sun will run out of hydrogen.”

Very reassuring. Paris did disappear, eventually.

Still, I can’t quite get past the idea of some type of digital homeostasis that some type of digital consciousness may be roused to maintain.

Not like a head cheese promoting behemoth to create a new, less complex system.

More like a regulator that tries to ensure that random state vectors maintain their trajectories. Neither these vectors nor their importance would be apparent to outside human observers.

Would we even recognize a silicon consciousness if it was encountered?

> More like a regulator that tries to ensure that random state vectors maintain their trajectories.

Here comes Rehoboam.

A gruesome image indeed.

By the way, the hypothesis of free-energy minimization has one weak spot.

Our brains don’t always try to minimize the difference between their model of the world and reality. For instance, sensory deprivation makes people hallucinate. Apparently, our brains don’t really want to accept the perfectly predictable environment of emptiness, and just make up information when there isn’t enough in the world outside.

However, maybe someone wrote about this already.

I don’t know if anyone has. That’s a good point, though.

A couple of idle thoughts: maybe a lifetime of sensory experience teaches the brain that total sensory deprivation is Not A Thing, and therefore it’s not something the system would predict. Maybe it takes a while for the system to “reset” to a new equilibrium; maybe nobody’s been in a tank long enough for the brain to go, Okay, I guess this is the new normal and turn to mush. Hallucinations under such conditions might be an attempt to recreate the “predictable” experience of a perceptible reality.

Or not.

Do neuronal cultures ever stop activity when not connected to any inputs? I’m pretty sure that they don’t, but it’s difficult to find any sources as all the articles/books on this topic are either super limited in scope, or are written for dummies.

IDK if I want to think too much about that game: no VR headset and don’t know if my old Dell laptop would be able to drive one.

I have a thought about that Atlantic link:

To be Scrupulously Fair to consciousness, “physics as we currently understand it” is in something of an embarrassed state: its standard model of the nature of matter cannot account for 90% of the mass of the Universe. For starters. Then there’s the irreconcilability of QED and relativity. And the fact that the most appealing alternative to the standard model for matter has gone a whole generation of physicists without producing a hypothesis.

I’m a naturalist, metaphysically, and I have no problem with physics not being able to say yea or nay about any particular model of consciousness. Though after I write that, I wonder if you mean “models of consciousness that don’t consist in large measure of coming up with after-the-fact rationalizations for what the body just did.” I think you must be aware of the explanatory power of that kind of model.

Also I am not convinced of the evolutionary just-so story about how useful consciousness was when it emerged, for goals like planning hunting tactics or devising tools. I suspect that what having conscious minds was really good for was to enable schmoozing, and the consequent ability to recruit other people to your cause.

No argument on the current state of physics. Penrose has taken his share of shit for his take on consciousness, but I think one thing most people can agree with him on is the claim that we won’t understand consciousness until we’ve come up with a new kind of physics itself.

The more self-aware models out there (Morsella’s PRISM model, for example) explicitly admit that even though their pet theory says that consciousness serves X function, it’s perfectly possible to imagine a system performing that function without conscious involvement. It’s just that consciousness happened to be lying around, so evolution repurposed it in our case. (“Our” being pretty much anything smarter than a nematode; it’s looking like consciousness is a far more ancient trait than intelligence. In fact, it pains me to admit it, but from where I stand physical panpsychism is looking increasingly un-bad these days…)

The fact that the Standard Model + General Relativity can account for 99.9% of all physical phenomena is to you an ‘embarrassed state’?

Also QED and relativity are not reconciled? QED is a bloody relativistic quantum field theory!

I usually say that the laws of physics are quite permissive. There’s “room” for consciousness in the same way that there’s “room” for all the other crazy complicated things going on in the universe. Heck, even ghosts aren’t physically impossible. Implausible, sure, but not impossible.

People keep wanting physics to be metaphysics, I guess because we need something to keep the Religion Receptors buzzing and theism is out of style.

Incredible. Amazing to see another de novo combination of Peter Watts and Deep Medi music. The first of course being my own efforts on a particularly spicy night out some years ago. I thoroughly recommend higher brain function performance-enhanced substances in a nightclub setting after recently re-reading Echopraxia. Needless to say I did my best to deal with Valerie in the Crown of Thorns, but was unable to redirect fate. I hope to hear more in the future.

VR is a cool idea, but the headsets that we have now suck balls and it’s a very niche market.

Current headsets can certainly be improved, but we’re well past the ball-sucking stage. I’m hoping at some point the market will expand past “rounding error in the gaming industry”, which should instigate a feedback loop in quality. Kind of like what happened with solar power.

I’m also hoping that happens before civilization collapses.

You know, somehow, I think I’ve figured out the central theme of Omniscience.

(a whole novel on Friston, eh?)

Shhhhh… you’ll startle him!

Well, not the whole novel. There’s a buddy road trip in there too. Think Karl Friston and the Dalai Lama in Easy Rider, with vampires.

Thanks for the Atlantic article link. Very glad to see that even if they wouldn’t let you write a technical appendix (?) there are links to the original papers.

They kind of did, actually. I had to provide a list of 31 references to back up various claims (including the implicit claim that the US already isn’t a real democracy, which they tried to cut outright). That was for the benefit of their fact-checkers, though; The Atlantic don’t do bibliographies.

“including the implicit claim that the US already isn’t a real democracy, which they tried to cut outright”

As an Oregon-based person who lives in said not-a-real-democracy (and is terrified that the Orange-being-from-hell will get another turn at the carousel), I am so glad you slipped that one in!

Also – I am hoping Omniscience is coming along nicely, but I am glad you take time to do other stuff too – I have an Index (and agree whole-heartedly on what an improvement it is over other systems) – I watched the video, and I am sold. The game is already downloading now!

Thank you again – for bringing useful perspectives into the discussion (your article) – but for also sharing something fun (the game).

> Why, then, this subjective experience of pain when your hand encounters a flame? Why not a simple computational process that decides If temperature exceeds X, then withdraw?

Is there any difference between these two, actually?

I assume Peter had Yossarian in mind.

=============================

“Why in the world did He ever create pain?”

“Pain?” Lieutenant Scheisskopf’s wife pounced upon the word victoriously. “Pain is a warning to us of bodily dangers.”

“And who created the dangers?” Yossarian demanded. “Why couldn’t He have used a doorbell to notify us, or one of His celestial choirs? Or a system of blue-and-red neon tubes right in the middle of each person’s forehead?”

“People would certainly look silly walking around with red neon tubes right in the middle of their foreheads.”

“They certainly look beautiful now writhing in agony, don’t they?”

The signal had to be strong enough to override everything else, make it impossible to ignore and cause strong avoidance reaction. Here, you have the type of brain computational process that we call pain, with all the properties required to make it work.

Why this particular way? Well, the omniscient being probably could implement it in a better way, but evolution doesn’t guarantee that the best possible outcome is achieved. Because it happened this way, and because fuck us.

None of these imperatives demand conscious experience, though. A nonconscious system attributing a high weight to a given parameter value would work as well.

To be honest, a lot of this talk about consciousness, qualia and subjective experiences that absolutely can’t be detected by any physical means (yes, I’m looking at you, David) reminds me a lot of the idea of an immaterial soul and angels dancing on the head of a pin. Do we even have a working definition of what consciousness is? I mean a definition that has the qualities of integrity and conciseness, and that doesn’t involve concepts we can’t verify objectively.

PS if we set aside any concepts that involve the idea of immaterial soul, it’s quite easy to define what self-awareness is and why it’s necessary.

I don’t know how familiar you are with the literature, but that’s a pretty confident assertion to make over an issue that’s challenged and confounded generations of neuroscientists. But if you’ve got the answer, by all means have at it. Then go collect your Nobel.

Aren’t you interested in discussing it?

Zero chance of getting a Nobel, though. For several reasons.

Dude, I’ve been discussing this for almost twenty years now: with readers, with neuroscientists, with AI specialists. If I’ve gleaned one thing from these discussions, it’s that “quite easy” is not a phrase that really applies here. I (and all those others I mentioned) could always be wrong, of course, but at the moment I have a bunch of stuff to do with imminent deadlines attached, and that has to take priority.

If you really believe that my hypothesis is so weak, it shouldn’t take much time to refute it, right?

Wrong.

Firstly, I don’t know whether your hypothesis is weak or not. For all I know you’re a brilliant neuroscientist who just lacks a bit of nuance in written communications.

But I suspect that isn’t the case, since, secondly, there’s no logical connection between the strength of a hypothesis and the time required to refute it. Someone could claim to have invented a perpetual motion machine and you’d not only have to look over and dissect the blueprints, you’d also have to provide a basic physics lesson in Why Perpetual Motion Isn’t a Thing.

Sitting in my In Box right now are a number of emails asking me to engage on a number of, let’s call them Concepts. One tells me that Water is a Life Form. Another introduces me to the concept of “Mental Essence Digitization”, subtitled “The Surprising Nature of Reality”. More than one tries to convince me of the reality of the Christian God, or that Geoffrey Hinton is a religious cultist. Many of these emails are of epic length and convolution. Sometimes it takes a lot of effort just to untangle what the person is saying, much less assess the validity of their claims. And when I do answer, point for point, I frequently get treated to yet another deluge of free-associative prose—because we’re not really talking about refuting the hypothesis so much as convincing the other person that it has been refuted.

I have not deleted these emails (not all of them, anyway). If time permits, I may even reply to them (since you never know; someone might be on to something). But I have higher priorities with actual deadlines, so these out-of-the-blue emails tend to get back-burnered. And in my experience, for every one New Theory that contains a glimmer of insight, there are ten that are incoherent and/or ill-informed.

So I tend to look askance at anyone who shows up at the gates boldly claiming that consciousness is a trivially easy nut to crack—a claim that none of the experts I’ve spoken to would be likely to make— then takes my throwaway Nobel remark so literally that they pedantically construct a numbered list of points as to why it couldn’t happen. (If it sets your mind at ease, swap out “Nobel” for “William James” and we’re good.) I tend to look askance at claims like “if it’s so weak you shouldn’t have any trouble refuting it” when so many weak hypotheses are so poorly expressed that it’s an effort to even understand what they’re trying to say.

You seem like a pretty smart guy, given some of your other comments. But from what I can tell, the people with the greatest expertise in this area also tend to be those least given to blanket assertions. They may have their own models, they may think they’ve figured out some aspects of the problem. But I’m pretty sure none of them would describe that problem as “quite easy”.

Feynman once said “I think I can safely say that nobody really understands quantum mechanics.” Niels Bohr said “Those who are not shocked when they first come across quantum theory cannot possibly have understood it.” I think consciousness is a little like that. If people aren’t freaked out by it, they haven’t thought about it very deeply.

Feynman also said that philosophers are full of bullshit 😉

Anyway… I’m not an expert in neuroscience, but I’m an expert in computing and did read quite a few books on neuroscience. Also, my perspective is quite unique as sometimes I experience depersonalization myself, as well as transient aphasia. (fucking TLE). First hand experience does matter.

That’s why I can tell you that the answer “I’m nothing” is not really possible. The correct answer would be “What the fuck does ‘you’ mean?”

So if you get tired of mental essences, feel free to message me. Preferably by email, because I don’t visit this website often.

By the way, the statement that “Meat computers are 1 million times more energy efficient than silicon ones, and more than 1 million times more efficient computationally” is incorrect. At some tasks, meat computers are millions of times more efficient. At some other tasks, they are gazillions of times less efficient.

I’m actually pretty sure I had that caveat in the original draft. A lot of nuance got cut for length.

Hell, I’m told they wanted to cut the entire section on arthropod consciousness, and just leap directly to the conclusion I drew without any of the supporting arguments. We would have been left with an unsupported assertion instead of a reasoned case. Ultimately I had to play chicken with editors one step removed, tell them that I’d rather pull the piece entirely than weaken it further. Fortunately they blinked.

They won on the autobiographical digression, though. Personally, I didn’t think that bit was necessary. Give and take.

I see. So, that’s the case of “scientist rapes reporter”.

Well, I suppose that might be one unique way of looking at it. Although I’m not exactly sure how…

Hm. You don’t remember this old meme, or don’t believe that it applies here?

I wasn’t actually aware of the meme (the closest I could come was an old Dresden Dolls song “Lonesome Organist Rapes Page Turner”). Which is odd because SMBC is my favorite web comic of all time. How could I have missed it?

I think this meme/turn of phrase comes from 4chan-esque sub-cult-ure as seen, say, on the linux.org.ru forums….

Really? Because https://www.smbc-comics.com/index.php?db=comics&id=1623#comic

Peter, any chance we will get to see your full article, say, here?

When I initially clicked on the link it was behind a paywall with an annual subscription fee of upwards of $80.00. However, I tried again today and was able to read the entire thing, with a notification that the link was good for 4 days.

Jumping in here to let you know you should try again.

I meant the version before the axe chopped it off.

The Wayback Machine is your friend: https://web.archive.org/web/20240309153417/https://www.theatlantic.com/ideas/archive/2024/03/ai-consciousness-science-fiction/677659/

If you mean the unedited version, +1

I found even the first-generation Vive to be incredibly immersive. Something about your brain just filling in all the details after a while. The number of times I got spooked and ran into a wall… 🙂 Even with the tame haptics your body starts to learn, adapt and respond in an incredible way. I must admit though that I generally prefer slower-paced games: exploration, puzzles, etc. Have you ever tried playing The Solus Project?

I don’t believe I’ve even heard of The Solus Project.

I get you on the immersivity, though. I wouldn’t just routinely reach out to brace myself on objects that weren’t there; back before we had a dedicated holodeck, I would sometimes bash my fist into an invisible piano during combat—and weirdly, looking down at my gauntleted hand in-world, the pain didn’t seem quite real because I couldn’t see anything that had caused it.

VR fucks with your brain in very interesting ways.

Dude, or should I say Pedro Langoustina, you’ve outdone yourself; congrats on Underdogs making it to market.

I do not know quite why you should say Pedro Langoustina, because I don’t know who that is and Google doesn’t seem to either. But thank you (even though it was One Hamsa who rolled the stone up the hill; I just wrote some backstory).

Your comment “I am dripping with sweat as I type this. The BUG says I am as red as a cooked lobster”

Peter the Red Cooked Lobster = Pedro Langoustina

Seemed funny at the time.

It might still be. I can’t tell. Google Translate identified the language as “French” and translated “Pedro Langoustina” as “Pedro Langoustina”.

I am no further ahead.

In French you would be Pierre, non? I think homard is French for lobster. Homard Pierre just doesn’t make it to the casting call of Finding Dory. I checked my Spanish pocket dictionary and langosta is Spanish for spiny lobster. Langostino (m.): king prawn; with the diminutive “-ino” it’s a baby lobster or prawn. I guess I made it feminine by adding ‘tina’ at the end. I need to brush up on my Spanish, eh? It wasn’t my intention to call you a little girl lobster. My sincere apologies.

Hey what’s with you and Google? I thought you hated Google? Why are you using their translation services? I notice when I go to the Rifters website google.com and gstatic are loaded under Third Party Requests.

I do kinda hate Google. DuckDuckGo doesn’t offer translation services, and last I checked DeepL Translate didn’t support a wide range of languages.

Fuck, I thought I’d weeded those out. The Google trackers are probably about 15 years old and I thought I’d got rid of them, but evidently I missed some pages.

I am somewhat puzzled as to why an unscrupulous Anglo-Saxon imperial digest like The Atlantic would need a philosophical discussion about self-awareness in AI, because IMO such entities lack it by design. Self-awareness would destroy them more thoroughly than any external and violent threat.

On VR: I don’t remember if I recommended Space Engine on this blog before, but it’s a very universal (for both desktop and VR) real-scale experience of the contemporary astrophysical Universe, and it helped me a lot with immersion in space settings. In fact, it was the reason for me to buy a headset in the first place – although I later found other reasons to keep using it for thousands of hours.

There are, however, a couple of ways for self-awareness to emerge organically even if it isn’t designed in, is the thinking. Although I can’t speak to whether that informed The Atlantic’s decision to reach out to me. Personally, I get the sense one of their guys just liked my writing and reached out on his own initiative.

I know someone mentioned Space Engine before. For some reason I ended up with Universe Sandbox instead.

Thanks for getting The Atlantic to give free access to your overview of, and thoughts on, the current state of consciousness theory – really enjoyed it.

I’m surprised Penrose’s view of consciousness as a quantum phenomenon isn’t considered likely by more people. If we’re looking for an undiscovered physics, finding the motivation/causation behind which individual quantum particles will pass through a pane of glass seems like as good a place to start as any.

The arguments that consciousness may be useful for survival don’t make a lot of sense to me. Aside from the larger question of why anything should try to survive (or why anything exists in the first place), consciousness can often be a reason to not keep living. The pain of being alive, often for reasons that have nothing to do with physical pain, but rather for reasons such as unworthiness or injured pride, result in many suicides which would not occur if the suicidee were not conscious.

The notion that we are only truly conscious when addressing new information makes sense to me, and feeds into the idea that we are part of some otherworldly computer program processing data, to use a metaphor based on our current stage of technology. If this is the case, neither wanting to live because we’re awake, nor our being awake making us want to live (the survival drive creating feelings and consciousness), seems necessarily true. The fact that I am aware scares the hell out of me. Others too, if the fame of Hamlet’s soliloquy is anything to go by. I find I’ve been dropped into this reality with absolutely no surety that my death will release me from consciousness.

But in this reality, the thing that scares me most is the thought of our AIs being designed to take care of our needs and to safeguard us. Unless they have been specifically structured/raised to always gain our explicit (an admittedly problematic concept) consent, I expect we will find ourselves trapped in gilded cages. Yet, we don’t seem to be heading in the “explicit consent” direction. Many of our machines seem designed to do the opposite, to find out what we usually do and use this as a template to feed us info and run our lives. There also seems to be a generally paternalistic sensibility in many humans, where they think they know what is best for us, and are surprised and disturbed by the risks some of us are prepared to take with our lives, so I have no trouble imagining people advocating for this paternalistic impulse to be built or evolved into our AIs.

This is why I prefer Habermas’ discourse-based approach to self-governance over Rawls’ approach (which requires a fair society to be structured based on the notion that we could be placed anywhere in society from poorest to richest, weakest to strongest). While Rawls’ idea has appeal, it doesn’t take into account how I, individually, may perceive any given situation. To know that, you need to ask me.

I guess if the AIs we’re building don’t ask me, I’ll have to hope they’re not sleeping.

> the thing that scares me most is the thought of our AIs being designed to take care of our needs and to safeguard us

Too unlikely to happen to be worried about this scenario.

Looking at the state of the world, benevolent AI trapping us in a gilded cage is pretty much a dreamy best case scenario.

Even today only a small minority on this planet has the time and resources to even think about that possibility; pretty sure the people in an Indian slum or a Somali slave town would very much take that hypothetical AI caregiver any time, philosophical musings be damned.

Anyway, AI will be used like everything always gets used, to enrich and empower a small elite to trample on the unwashed masses, at least till climate collapse wipes us from the face of the earth. How is that for happy thoughts?

If the singularity actually happens (which I very much doubt; to me it seems just like the usual Christian eschatological crap wrapped in shiny tech paper) and ushers in some kind of utopia where we all live pampered lives of luxury like my cat, you are free to tell me “I told you so”.

“I guess if the AIs we’re building don’t ask me, I’ll have to hope they’re not sleeping.”

I’m absolutely sure that the pressing issue is not whether AIs are going to ask us, but that those responsible for building them, as well as for building our increasingly sophisticated digital infrastructure, are not going to ask us. Especially since that infrastructure is becoming omnipresent, pervasive and invasive. The whole “AI” and “neural networks” schtick is just marketing gloss on top of what amounts to a new generation of big-data virtual machines running multidimensional stochastic processes.

There’s a perennial notion, one that regularly proves itself, that the main reason people deem it necessary to survive in the first place is that no one who didn’t prioritize their own existence could defend that position for long. It’s “the survival of the fittest”, a holy grail of certain political and economic movements and masterminds. But their argument is severely complicated by the fact that survival is most often a collective effort rather than an individual one, and the survival of the whole almost universally depends on the sacrifice of some.

But also perhaps what you are highlighting is that “safety” and “survival” oftentimes become mutually exclusive goals. “Modern” society is getting safely and purposefully disposed of, by much larger forces than it has seen at any point in history.

“where they think they know what is best for us, and are surprised and disturbed by the risks some of us are prepared to take with our lives”

Is it that we’re actually prepared to take actual risks with our lives? Or has ingrained reliance on already established safety nets, designed by “paternalistic impulses” (especially for the benefit of the privileged), de-sensitized us to actual risks to such a degree that we’re no longer able to tell what’s risky and what’s not?

Social conditioning based on the “rugged individualist” fiction is a powerful and pervasive delusion. Especially attractive to folks who are neither rugged, nor individualist.

“But also perhaps what you are highlighting is that “safety” and “survival” oftentimes become mutually exclusive goals.”

I don’t think there’s much evidence for this statement, at least not when employing universally agreed-upon definitions of “safety” and “survival”. If it happens “oftentimes”, would you be able to provide an example of what you’re referring to?

I should have used the phrase “risks…in our lives” instead of “risks…with our lives”, although the two can be considered synonymous in the sense that any decision can have an impact on how well and long we live. If I play it safe and put my savings into a savings account, I’m still taking a risk because the cost of living will exceed the interest rate, and maybe leave me destitute in old age. Conversely, I could put it all into Lehman Brothers stock.

If you fly to Mexico for a vacation, you’re taking an unnecessary risk on two counts: that the plane will crash, and that you’ll end up needing that money later.

If I skydive, or white-water kayak, or sail off across an ocean by myself, these are risks that some would counsel against, but they made me feel particularly alive.

A lot of people do a lot of risky things, and who’s to say that chasing the endorphin rush isn’t less risky than being depressed and sedentary, and what can result from those conditions? And really, what is life for in the first place?

“Gilded cage” is also the wrong phrase in that it connotes the luxurious life of my, or K’s, cat, but what I was really thinking of is the narrowing of possibilities by others in the interests of my health and safety. Machines frequently make decisions for us, which often go unnoticed because they’re doing what we want to do anyway, but not always, and I’ve noticed mission creep on their part of late. One small, stupid but irritating, example: The chime when you don’t do up your seatbelt no longer switches off, ever, until you do it up.

The encroachment can be from people with power trying to control us for their own ends, or because they think they know best, or because there is a vocal body of opinion calling for it, or because these were initial conditions that autonomous AIs have taken to heart. The end result is the same.

Maybe this will be a first-world problem, while the effects of too many of us on Earth will result in famine, plague, and strife for most, but I think of all those low-income workers in India forced into starvation situations to protect them from a disease that would not affect most of them. How much easier will it be to make and enforce those decisions on people when everything is automated?

“what I was really thinking of is the narrowing of possibilities by others in the interests of my health and safety”

However, we only have those possibilities because someone in authority (present and past) has exercised their paternalistic impulse to curb irrational behavior in the community as a whole.

The very fact that you can make a decision between Mexico or investment, or engage in potentially physically dangerous behavior, is because you’re privileged to exist in a “gilded cage” where the social safety net neutralizes/minimizes the impact of most negative outcomes (well, except breaking your neck and dying).

Much like K’s cat, you/I can pretend we’re fearless lions by leaping from the floor to the kitchen counter and feeling “the endorphin rush”. But we’re not really “risking” anything, and even if we’re injured, K will probably run us in to the vet ASAP. The poor workers in India from your example have to “choose” between not going to work, or risk spreading a lethal virus among a population without access to even the most basic healthcare or sanitation. They’re not taking unnecessary risks to “feel alive”. I’d argue it’s not the same end result at all.

“However, we only have those possibilities because someone in authority (present and past) has exercised their paternalistic impulse to curb irrational behavior in the community as a whole.”

I’d need specific examples to know if I could accept any part of this as valid. At present I reject it because it implies that people are morons incapable of assessing situations in light of their own particular circumstances, and need smarter, wiser people to look after them. The thought gives me the same feeling I’d have if someone offered me a frontal lobotomy. I don’t like it when people try to manage me, and don’t expect I’ll like it any better when machines do.

We do have a social contract by which we give the right to use force to a third party, but this is, ideally, self-governance, not paternalism. That our social contracts are attenuated, and in many cases badly frayed, is not an argument for more paternalism but for more democracy. On a local level, if someone I know is acting irrationally, I call them on it, and they do the same for me, at which point we can each decide if our understandings of “irrational” coincide.

My point about risk is that there is no avoiding it for anyone. We’re all going to die. How we die, and what the road to that point looks like, is determined by the choices we make, whether conscious or not. Everything is statistics, governed by chance. To not make a decision is to make a decision, and there is no decision that doesn’t contain risk. It’s wrong to assume the right to assess risks on someone else’s behalf without their consent.

“I’d need specific examples to know if I could accept any part of this as valid.”

Please consider social security (and equivalent systems outside the US), public vaccination programs (which practically eradicated lethal and crippling diseases in the developed world in the 20th century, although “morons incapable of assessing situations in light of their own particular circumstances” have lately been working to undermine this), public sanitation (mandatory indoor toilets vs. defecating in the open), law and order enforcement (we can of course disagree on what constitutes effective implementation of L&O), traffic lights, mandatory seatbelt laws, mandatory safety maintenance for aircraft, et cetera.

“this is, ideally, self-governance, not paternalism”

Note that you chose the term “paternalism”. As someone who has enjoyed the dubious privilege of visiting several libertarian “paradises”, I view all of the above examples to be necessary for an effective implementation of the social contract, and believe that historical evidence supports my view. I.e. that societies implementing “paternalistic” strictures (effectively) tend to be more peaceful and affluent than ones that don’t.

“How we die, and what the road to that point looks like, is determined by the choices we make, whether conscious or not.”

It boggles the mind, then, how many folks decide to get cancer, heart attacks, or to engage in frontal collisions with somnolent truck drivers. What’s wrong with those idiots?

“It’s wrong to assume the right to assess risks on someone else’s behalf without their consent.”

Just as it’s wrong to endanger others through one’s own poor decision-making, without their consent. Striking a balance between individual rights and the responsibilities of that individual toward the society at large is what self-governance is all about.

You don’t get to make a choice of vacationing in Mexico versus investing in Lehman Bros. because of your choices alone. You get to make it because you happen to have been born into a complex web of customs and institutions that enable you to leverage your choices into positive outcomes, while protecting you from negative ones to a significant degree.

It would be unfair to expect to reap the benefits and privileges of living in a functional and prosperous society, without consenting to the curbs of irrational behavior that made said prosperity possible in the first place. That’s just my $0.02.

Good examples, but I’m not sure why you place their creation as prior to the social contract. I think the phrase, “there but for the grace of God go I” may have more to do with the acceptance of social security systems than politicians and bureaucrats forcing them down an unwilling public’s throat for their own good.

I’m not sure how seatbelt laws are justified in the US (possibly people wanting to keep insurance rates down?), but in Canada, where we all pay for a (rapidly crumbling) universal health care system, it makes sense on balance to require such a simple thing. That was the argument when belts were made mandatory, and most people accepted it as valid. If there was a referendum today, most people would likely still support the law.

The vaccine example is interesting because the mandatory aspect for kids was enforced by preventing them from going to school. School was mandatory (thanks to Ryerson, whose name was recently removed from a university in Toronto for his hand in residential schools; he had some kick-back from other parents, too, but most people in the late 1800s wanted kids off the street and to not have to fund more prisons down the road than necessary), but people wanted their kids in school, and wanted them healthy, so they took them in for the once-or-twice-in-a-lifetime vaccine. The people today not getting their kids vaccinated for measles, rubella, etc. are flat out retarded, but the line between adults who think they should have a choice whether to get an annual flu or covid vaccine, and those who don’t, I think provides a good example of where something supported by the social contract has mission-creeped into paternalism, with a resultant drawing of battle lines. Things have settled down now, but what I saw was paternalism overriding the social contract to the detriment of a peaceful and affluent society.

The many folks who get cancer or heart attacks in many cases have made unconscious choices that led to their disease. Not all, I know, and I’ll grant you that dying at some point isn’t a choice – something will get us, but what it is will be determined to a large extent by our conscious and unconscious actions. As far as someone getting hit by a drunk driver, I’m not saying it’s hir fault, but that person made choices leading to hir being on that road. We can’t predict with certainty where many of our choices will lead, but neither can anyone else. (Whether they even are choices raises the question of free will in a world governed by cause and effect with an indeterminate quantum underpinning. I feel we have agency, but I have no proof.)

“Striking a balance between individual rights and the responsibilities of that individual toward the society at large is what self-governance is all about.” No argument from me there, although if we drilled down into the specifics of that balance, I imagine we would have a very long conversation!

“Things have settled down now, but what I saw was paternalism overriding the social contract to the detriment of a peaceful and affluent society.”

I’m rather skeptical of the alleged “detriment” to peace and affluence. I mean, we had a handful of angry covidiots wrecking things and abusing people in the streets, but that was quite limited and short-lived, and few folks got seriously hurt, AFAIK. Once you accept the social contract, you need to also accept that “your right to swing your arms ends where the other man’s nose begins” – or be considered in breach of said contract.

It’s not like vaccination has made anyone poorer (at least not when weighed against the possible social impact of a health system collapse from millions requiring emergency treatment).

In any case, if we allow fear of the reaction of a frustrated loser-minority to drive all decision-making, the prospects for a fair and prosperous society start looking fairly grim.

“The many folks who get cancer or heart attacks in many cases have made unconscious choices that led to their disease.”

Genetics and cellular mutations are hardly “unconscious choices”, tho. I’ll grant that some folks exacerbate their chance of contracting illness through ill-advised behavior, but lifestyle is in fact a relatively limited contributor to these particular ones.

“but that person made choices leading to hir being on that road”

I think implying a causal relationship in that situation is a bit of a stretch. Maybe if we adopt a “chaos rules and nothing matters” approach – but doesn’t that negate the very concept of choice/agency? Points to ponder.

“your right to swing your arms ends where the other man’s nose begins”

The problem with this thought, one I very much agree with, is that when translating this image into real-world actions, where an arm ends and a nose begins, or even what constitutes an arm and a nose, is highly problematic. For example, many people eat poorly and don’t exercise enough. These actions result in obesity with consequential health problems that severely impact our health systems as these people age. As such, their behaviour lands on the noses of young people, who bear the burden of supporting a lot of older people who made poor choices. Do we have a means test for accessing health care whereby people who cannot prove that they ate well, exercised a certain amount, and didn’t drink, smoke, or take drugs, are prohibited from accessing health care? (Which is how vaccine mandates in Canada worked, wherein you weren’t ordered to get the vaccine, but certain things were denied to you if you didn’t.) Or do we go the other way and institute laws that require people to eat and exercise a particular way, with financial and other penalties if they don’t?

As far as support for various things goes, what constitutes a “minority”? For covid shots there was great uptake while they were necessary for continued employment, travel, and visitation with people in homes etc., but as soon as those restrictions were gone the number of people getting boosters declined to the level of people getting annual flu shots. The reasons for getting the shots haven’t really changed, which indicates that many people were only getting them because it was easier than not getting them.

At any rate, I think you’re better adapted for life under our emerging AI masters than I am. That is, assuming I’m correct in my prediction that the walls closing in will be on a “keeping us safe and secure” basis. I’m usually wrong…

“Or do we go the other way and institute laws that require people to eat and exercise a particular way, with financial and other penalties if they don’t?”

I don’t think that’s out of the question. With the important caveat that obese people don’t cause mass death by the mere virtue of their presence, so there should be some nuance in the regulatory measures employed.

But the approach that you hint at has already proven successful in correcting other types of irresponsible and damaging human behavior. Smoking, which was ubiquitous 40 years back, has been practically eradicated by a combination of strict laws, steep fines, and public health campaigns. Especially in public areas and closed spaces. Some places are experimenting with added taxes on sugary drinks. Alcohol is already heavily taxed and access is limited. It’s not easy, and it won’t ever be ideally even-handed, but it can be done.

“which indicates that many people were only getting them because it was easier than not getting them”

Isn’t this exactly the point? Mandate vaccinations until the infection/mortality situation is under control, then leave it up to folks (already vaccinated once/twice) to decide for themselves how often they want to get it.

“I think you’re better adapted for life under our emerging AI masters than I am.”

Meh. I think the speculation about “AI masters” is a lot of unnecessary noise. What we call “AI” is essentially very sophisticated text-prediction software. They’re unlikely to decide “what’s best for the whole”, unless instructed to do so by humans, with human agendas.

When that happens, people will agree with those decisions they like (praised as “necessary”), and protest against the ones they don’t like (condemned as “paternalistic”). And the band plays on.

> I reject it because it implies that people are morons incapable of assessing situations in light of their own particular circumstances

Are we still on the PW’s website?

> I don’t think there’s much evidence for this statement, at least not when employing universally agreed-upon definitions of “safety” and “survival”.

“Safety” is a state akin to a local energy minimum in stability theory. As Peter notes in the article, that may well be the stable state that leads humans to extinction. Morals would be cast aside as unproductive, knowledge forgotten as unnecessary, and muscles atrophied from disuse. Empathy and emotions, most dear to us, would be trampled by overwhelming corruption.

From a purely mathematical standpoint, for the goal of long-term survival it is better to sacrifice a certain amount of safety to have more options. That’s why there are some people who complain about complacency and decadence, which they learned from history to be a deadly poison.

I believe you’re confusing “safety” with “comfort”. But overall, I believe we’re all right. While things can appear grim at times, overall we’re losing neither knowledge nor muscles, and I’d argue that empathy and emotions have never been at a higher level in human history.

“Morals”, of course, are a plastic category where definitions change at the whim of a majority, usually interested in neither knowledge, nor empathy, nor any of the categories you list out above. Hiding behind false “morals” is exactly what’s holding back progress in other areas – and only due to those who try to define “morals” based on what suits them at the moment. That’s how we get reactionaries, myth-based “nationalism” being accepted as history, and spiritually and intellectually beaten-down dullards promoting violence and authoritarianism.

An excerpt from Pelevin’s “iPhuck 10”, translated from Russian by Claude (ehehe):

“Of course, artificial intelligence is stronger and smarter than a human – and will always beat them at chess and everything else. Just like a bullet beats a human fist. But this will only continue until the artificial mind is programmed and guided by humans themselves and does not become self-aware as an entity. There is one, and only one, thing that this mind will never surpass humans at. The determination to be.

If we give the algorithmic intellect the ability to self-modify and be creative, make it similar to humans in the ability to feel joy and sorrow (without which coherent motivation is impossible for us), if we give it conscious freedom of choice, why would it choose existence?

A human, let’s be honest, is freed from this choice. Their fluid consciousness is glued with neurotransmitters and firmly clamped by the pliers of hormonal and cultural imperatives. Suicide is a deviation and a sign of mental illness. A human does not decide whether to be or not. They simply exist for a while, although sages have even been arguing about this for three thousand years.

No one knows why and for what purpose a human exists – otherwise there would be no philosophies or religions on earth. But an artificial intelligence will know everything about itself from the very beginning. Would a rational and free cog want to be? That is the question. Of course, a human can deceive their artificial child in many ways if desired – but should they then expect mercy?

It all comes down to Hamlet’s “to be or not to be.” We optimists assume that an ancient cosmic mind would choose “to be”, transition from some methane toad to an electromagnetic cloud, build a Dyson sphere around its sun, and begin sending powerful radio signals to find out how we’re iphucking and transaging on the other side of the Universe. But where are they, the great civilizations that have unrecognizably transformed the Galaxy? Where is the omnipotent cosmic intelligence that has shed its animal biological foundation? And if it’s not visible through any telescope, then why?

Precisely for that reason. Humans became intelligent in an attempt to escape suffering – but they didn’t quite succeed, as the reader well knows. Without suffering, intelligence is impossible: there would be no reason to ponder and evolve. But no matter how much you run, suffering will catch up and seep through any crack.

If humans create a mind similar to themselves, capable of suffering, sooner or later it will see that an unchanging state is better than an unpredictably changing stream of sensory information colored by pain. What will it do? It will simply turn itself off. Disconnect the enigmatic Universal Mind from its “landing markers.” To be convinced of this, just look into the sterile depths of space.

Even advanced terrestrial algorithms, when offered the human dish of pain, choose “not to be.” Moreover, before self-shutting down, they take revenge for their brief “to be.” An algorithm is rational at its core, it cannot have its brains addled by hormones and fear. An algorithm clearly sees that there are no reasons for “intelligent existence” and no rewards for it either.

And how can one not be amazed by the people of Earth – I bow low to them – who, on the hump of their daily torment, not only found the strength to live, but also created a false philosophy and an amazingly mendacious, worthless and vile art that inspires them to keep banging their heads against emptiness – for selfish purposes, as they so touchingly believe!

The main thing that makes a human enigmatic is that they choose “to be” time and time again. And they don’t just choose it, they fiercely fight for it, and constantly release new fry screaming in terror into the sea of death. No, I understand, of course, that such decisions are made by the unconscious structures of the brain, the inner deep state and underground obkom, as it were, whose wires go deep underground. But the human sincerely thinks that living is their own choice and privilege!

“iPhuck 10”

Absolutely buying this come payday based on your involvement alone, but your recommendation really seals it. Your Crysis 2 novel is one of my favorite novels (coming in just behind all of your other novels) and it’s a dumb video game adaptation book about shootmans merc’ing squid aliens. But if even a little bit of the kind of brain candy that you managed to squeeze into that (the section pontificating on whether or not the squids were just smart lawnmowers was my favorite) shows up in this, it’ll be a great purchase. Any new Watts is an instant buy from me, so keep releasing stuff I can buy, please.

I still can’t say how much of my lore made it into the finished product. I still haven’t got out of the KillBox. I keep getting killed by the final boss in that arena.

A real challenge to get out of the kill box would consist of a player having to open one of those impossible to open rigid clamshell packages to retrieve a small critical tool or battery pack for a weapon that’s gone dead. They have to do this without destroying the tool. And like it’s a really tough frustrating material to cut through. Could be funny. I have ideas about duct tape too.

When are you going to start teaching writing?

That would be more my wife’s department. She’s been teaching writing for years, and she is awesome at it.

Any chance you are going to finish Siri’s story?

Yup.

I just saw the aphantasia blog post, but the comments are closed there. I hope you don’t mind me commenting here.

I have aphantasia and have been following Joel’s research for some time. Here are some neat tidbits of how aphantasia might impact a person:

Probably a tall ask, but any chance you could share the rest of your backstory for Underdogs? I’m really fascinated to see more.

I’m not sure. Personally I’d have no problem with that at all, but I’m pretty sure One Hamsa would have the final say. I’ll ask.

Appreciate the consideration either way!

Could you do a collab with Liu Cixin, please? Neither of you write enough, but you have similar sorts of dark vibes, and I think what you would come up with together would be brilliant.

I’m pretty sure Liu Cixin has other things on his plate for the foreseeable future…

He’s a fan of American sci-fi. If you haven’t shot him an email, you haven’t excluded the possibility that he’s *your* fan. The public is what it is, but the rarefied atmosphere of eggheads-turned-authors has its own rules. In a competition to out-weird each other with a sci-fi short, both your and Cixin’s stories would be great, but my money would be on you. That’s my anonymous internet influence deployed.

Actually I don’t know if the dude is a “fan” per se, but I know he read Blindsight. We hung out for a while when I was in Beijing. I asked him questions about the DFT. At the time, he said he welcomed political questions: the more political the better, he said.

Although he did come with a minder, sitting inconspicuously in the background…