Extraordinary Claims

I’ve had a fondness for Daryl Bem ever since his coauthored paper appeared in Psychological Bulletin back in 1994: a meta-analysis purporting to show replicable evidence for psionic phenomena. I cited it in Starfish, when I was looking for some way to justify the rudimentary telepathy my rifters experienced in impoverished environments. Bem and Honorton gave me hope that nothing was so crazy-ass that you couldn’t find a peer-reviewed paper to justify it if you looked hard enough.

Not incidentally, it also gave me hope that psi might actually exist. There’s a whole shitload of things I’d love to believe in if only there was evidence to support them, but can’t because I fancy myself an empiricist. But if there were evidence for telepathy? Precognition? Telekinesis? Wouldn’t that be awesome? And Bem was no fruitcake: the man was (and is) a highly-regarded social scientist (setting aside the oxymoron for the moment) out of Cornell, and at the top of his field. The man had cred.

It didn’t really pan out, though. There were grumbles and rebuttals about standardisation between studies and whether the use of stats was appropriate — the usual complaints that surface whenever analysis goes meta. What most stuck in my mind back then was the point (mentioned in the Discussion) that these results, whatever you thought of them, were at least as solid as those used to justify the release of new drugs to the consumer market. I liked that. It set things in perspective (although in hindsight, it probably said more about the abysmal state of Pharma regulation than it did about the likelihood of Carrie White massacring her fellow graduates at the high school prom).

Anyhow, Bem is back, and has made a much bigger splash this time around: nine experiments to be published in the Journal of Personality and Social Psychology, eight of which are purported to show statistically-significant evidence of not just psi but of actual precognition. The New York Times picked it up; everyone from Time to the Huffington Post sat up and took notice. Most of the mainstream reaction has been predictable and pretty much useless: Time misreads Bem’s description of how he controlled for certain artefacts as some kind of confession that those artefacts weren’t controlled for; the Winnipeg Free Press simply cites the study as one of several examples in an extended harrumph about the decline of peer-reviewed science. Probably the most substantive critiques hail from Wagenmakers et al (in a piece slotted to appear in the same issue as Bem’s) and an online piece from James Alcock over at the Skeptical Inquirer website (which has erupted into a kind of three way slap-fight with Bem and one of his supporters). And while I by no means dismiss all of the counter-arguments, even some of the more erudite skeptics’ claims seem a bit suspect — one might even use the word dishonest — if you’ve actually read the source material.

I’m not going into exquisite detail on any of this; click on the sources if you want details. But in general terms, I like what Bem set out to do. He took classic, well-established psychological tests and simply ran them backwards. For example, our memory of specific objects tends to be stronger if we have to interact with them somehow. If someone shows you a bunch of pictures and then asks you to, say, classify some of them by color, you’ll remember the ones you classified more readily than the others if presented with the whole set at some later point (the technical term is priming). So, Bem reasoned, suppose you’re tested against those pictures before you’re actually asked to interact with them? If you preferentially react to the ones you haven’t yet interacted with but will at some point in the future, you’ve established a kind of backwards flow of information. Of course, once you know what your subjects have chosen there’s always the temptation to do something that would self-fulfil the prophecy, so to speak; but you get around that by cutting humans out of the loop entirely, letting software and random number generators decide which pictures will be primed.

I leave the specific protocols of each experiment as an exercise for those who want to follow the links, but the overall approach was straightforward. Take a well-established cause-effect test; run it backwards; if your pre-priming hit rate is significantly greater than what you’d get from random chance, call it a win. Bem also posited that sex and death would be powerful motivators from an evolutionary point of view. There weren’t that many casinos or stock markets on the Pleistocene savannah, but knowing (however subconsciously) that something was going to try and eat you ten minutes down the road — or knowing that a potential mate lay in your immediate future — well, that would pretty obviously confer an evolutionary advantage over the nonpsychics in the crowd. So Bem used pictures both scary and erotic, hoping to increase the odds of significant results.

Note also that his thousand-or-so participants didn’t actually know up front what they were doing. There was no explicit ESP challenge here, no cards with stars or wavy lines. All that these people knew was that they were supposed to guess which side of a masked computer screen held a picture. They weren’t told what that picture was.

When that picture was neutral, their choices were purely random. When it was pornographic or scary, though, they tended to guess right more often than not. It wasn’t a big effect; we’re talking a hit rate of maybe 53% instead of the expected 50%. But according to the stats, the effect was real in eight out of nine experiments.

Now, of course, everyone and their dog is piling on to kick holes in the study. That’s cool; that’s what we do, that’s how it works. Perhaps the most telling critique is the only one that really matters; nobody has been able to replicate Bem’s results yet. That speaks a lot louder than some of the criticisms that have been leveled against Bem in recent days, at least partly because some of those criticisms seem, well, pretty dumb. (Bem himself responds to some of Alcock’s complaints here).

Let’s do a quick drive-by on a few of the methodological accusations folks have been making: Bem’s methodology wasn’t consistent. Bem log-transformed his data; oooh, maybe he did it because untransformed data didn’t give him the results he wanted. Bem ran multiple tests without correcting for the fact that the more often you run tests on a data set, the greater the chance of getting significant results through random chance. To name but a few.

Maybe my background in field biology makes me more forgiving of such stuff, but I don’t consider tweaking one’s methods especially egregious when it’s done to adapt to new findings. For example, Bem discovered that men weren’t as responsive as women to the level of eroticism in his initial porn selections (which, as a male, I totally buy; those Harlequin Romance covers don’t do it for me at all). So he ramped the imagery for male participants up from R to XXX. I suppose he could have continued to use nonstimulating imagery even after realising that it didn’t work, just as a fisheries biologist might continue to use the same net even after discovering that its mesh was too large to catch the species she was studying. In both cases the methodology would remain “consistent”. It would also be a complete waste of resources.

Bem also got some grief for using tests of statistical significance (i.e., if nothing but random chance were at work, what are the odds of getting results at least this extreme?) rather than Bayesian methods (i.e., given these specific results, what are the odds that our hypothesis is true?). (Carey gives a nice comparative thumbnail of the two approaches.) I suspect this complaint could be legit. The problem I have with Bayes is that it takes your own preconceptions as a starting point: you get to choose up front the odds that psi is real, and the odds that it is not. If the data run counter to those odds, the theorem adjusts them to be more consistent with those findings on the next iteration; but obviously, if your starting assumption is that there’s a 99.9999999999% chance that precognition is bullshit, it’s gonna take a lot more data to swing those numbers than if you start from a bullshit-probability of only 80%. Wagenmakers et al tie this in to Laplace’s famous statement that “extraordinary claims require extraordinary proof” (to which we shall return at the end of this post), but another way of phrasing that is “the more extreme the prejudice, the tougher it is to shake”. And Bayes, by definition, uses prejudice as its launch pad.
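To put rough numbers on how much the starting prior matters, here is a minimal sketch in Python. It assumes the odds form of Bayes’ theorem (posterior odds = prior odds × Bayes factor) and a purely hypothetical Bayes factor of 10 in favor of psi from each independent study. Neither that figure nor the function names come from Bem or from Wagenmakers et al; they are there only to illustrate the arithmetic.

```python
def posterior_prob(prior_prob, bayes_factor, n_studies=1):
    """Probability of the hypothesis after n independent studies,
    each assumed to carry the same Bayes factor in its favor."""
    prior_odds = prior_prob / (1.0 - prior_prob)
    post_odds = prior_odds * bayes_factor ** n_studies
    return post_odds / (1.0 + post_odds)


def studies_needed(prior_prob, bayes_factor, target=0.5):
    """Smallest number of such studies that lifts the posterior to `target`."""
    if bayes_factor <= 1:
        raise ValueError("evidence must favor the hypothesis (bayes_factor > 1)")
    n = 0
    while posterior_prob(prior_prob, bayes_factor, n) < target:
        n += 1
    return n


# Hypothetical Bayes factor of 10 per study (an assumption, not a reported value).
mild_skeptic = studies_needed(prior_prob=0.20, bayes_factor=10)   # 80% "bullshit"
hard_skeptic = studies_needed(prior_prob=1e-12, bayes_factor=10)  # 99.9999999999% "bullshit"
print(mild_skeptic, hard_skeptic)
```

Under those assumptions the 80% skeptic crosses even odds after a single study, while the 99.9999999999% skeptic needs twelve of them. The evidence is identical in both cases; only the launch pad differs.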

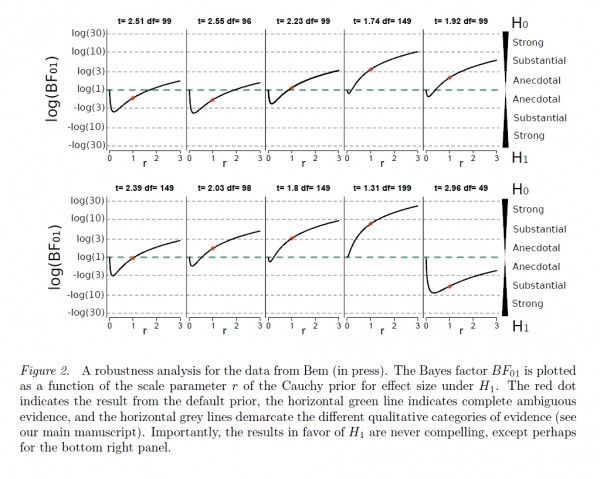

Wagenmakers et al ran Bem’s numbers using Bayesian techniques, starting with standard “default” values for their initial probabilities (they didn’t actually say what those values were, although they cited a source). They found “substantial” support for precognition (H1) in only one of Bem’s nine experiments, and “substantial” support for its absence (H0) in another two (they actually claim three, but for some reason they seem to have run Bem’s sixth experiment twice). They then reran the same data using a range of start-up values that differed from these “defaults”, just to be sure, and concluded that their results were robust. They refer the reader to an online appendix for the details of that analysis. This is what you’ll find there:

Notice the figure caption: “… the results in favor of H1 are never compelling, except perhaps for the bottom right panel.” Except perhaps? I’m sorry, but that last panel looks pretty damn substantial to me, if for no other reason than that so much of the curve falls into the evidentiary range that the axis itself labels as, er, substantial. In other words, even assuming that these guys were right on the money with all of their criticisms, even assuming that they’ve successfully demolished eight of Bem’s nine claims to significance — they’re admitting to evidence for the existence of precognition by their own reckoning. And yet, they can’t bring themselves to admit it, even in a caption belied by its own figure.

To some extent, it was Bem’s decision to make his work replication-friendly that put this particular bullseye on his chest. He chose methods that were well-known and firmly established in the research community; he explicitly rejected arcane statistics in favor of simple ones that other social scientists would be comfortable with. (“It might actually be more logical from a Bayesian perspective to believe that some unknown flaw or artifact is hiding in the weeds of a complex experimental procedure or an unfamiliar statistical analysis than to believe that genuine psi has been demonstrated,” he writes. “As a consequence, simplicity and familiarity become essential tools of persuasion.”) Foreseeing that some might question the distributional assumptions underlying t-tests, he log-transformed his data to normalise it prior to analysis; this inspired Wagenmakers et al to wonder darkly “what the results were for the untransformed RTs—results that were not reported”. Bem also ran the data through nonparametric tests that made no distributional assumptions at all; Alcock then complained about unexplained, redundant tests that added nothing to the analysis (despite the fact that Bem had explicitly described his rationale), and about the use of multiple tests that didn’t correct for the increased odds of false positives.

This latter point is true in the general but not in the particular. Every grad student knows that desperate sinking feeling that sets in when their data show no apparent patterns at all, and the temptation to inflict endless tests and transforms in the hope that please God something might show up. But Bem already had significant results; he used alternative analyses in case those results were somehow artefactual, and he kept getting significance no matter which way he came at the problem. Where I come from, it’s generally considered a good sign when different approaches converge on the same result.
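The general point about multiple tests is easy to put in numbers. The sketch below assumes the tests are statistically independent and that every null hypothesis is true; repeated analyses of the same data set, which is what Bem ran, are strongly correlated, so the real inflation in his case would be smaller than this. The Bonferroni threshold is shown as one standard correction, not as something any party to this argument says they applied, and the function names are mine.

```python
def familywise_error_rate(alpha, n_tests):
    """Chance of at least one false positive across n independent tests
    when there is no real effect anywhere."""
    return 1.0 - (1.0 - alpha) ** n_tests


def bonferroni_threshold(alpha, n_tests):
    """Per-test significance threshold that keeps the overall
    false-positive rate at or below alpha."""
    return alpha / n_tests


for m in (1, 5, 9, 20):
    fwer = familywise_error_rate(0.05, m)
    per_test = bonferroni_threshold(0.05, m)
    print(f"{m:2d} tests: familywise rate {fwer:.3f}, Bonferroni per-test alpha {per_test:.4f}")
```

The rate climbs quickly with the number of independent shots at the data, which is why the complaint holds in general. It says nothing against running a second, different analysis on a result that was already significant.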

Bem also considered the possibility that there might be some kind of bias in algorithms used by the computer to randomise its selection of pictures; he therefore replicated his experiments using different random-number generators. He showed all his notes, all the messy bits that generally don’t get presented when you want to show off your work in a peer-reviewed journal. He not only met the standards of rigor in his field: he surpassed them, and four reviewers (while not necessarily able to believe his findings) couldn’t find any methodological or analytical flaws sufficient to keep the work from publication.

Even Bem’s opponents admit to this. Wagenmakers et al explicitly state:

“Bem played by the implicit rules that guide academic publishing—in fact, Bem presented many more studies than would usually be required.”

They can’t logically attack Bem’s work without attacking the entire field of psychology. So that’s what they do:

“… our assessment suggests that something is deeply wrong with the way experimental psychologists design their studies and report their statistical results. It is a disturbing thought that many experimental findings, proudly and confidently reported in the literature as real, might in fact be based on statistical tests that are explorative and biased (see also Ioannidis, 2005). We hope the Bem article will become a signpost for change, a writing on the wall: psychologists must change the way they analyze their data.”

And you know, maybe they’re right. We biologists have always looked at those soft-headed new-agers over in the Humanities building with almost as much contempt as the physicists and chemists looked at us, back before we owned the whole genetic-engineering thing. I’m perfectly copacetic with the premise that psychology is broken. But if the field is really in such disrepair, why is it that none of those myriad less-rigorous papers acted as a wake-up call? Why snooze through so many decades of hack analysis only to pick on a paper which, by your own admission, is better than most?

Well, do you suppose anyone would be eviscerating Bem’s methodology with quite so much enthusiasm if he’d concluded that there was no evidence for precognition? Here’s a hint: Alcock’s critique painstakingly picks at every one of Bem’s experiments except for #7. Perhaps that seventh experiment finally got it right, you think. Perhaps Alcock gave that one a pass because Bem’s methodology was, for once, airtight? Let’s let Alcock speak for himself:

“The hit rate was not reported to be significant in this experiment. The reader is therefore spared my deliberations.”

Evidently bad methodology isn’t worth criticising, just so long as you agree with the results.

This leads nicely into what is perhaps the most basic objection to Bem’s work, a more widespread and gut-level response that both underlies and transcends the methodological attacks: sheer, eye-popping incredulity. This is bullshit. This has to be bullshit. This doesn’t make any goddamned sense.

It mustn’t be. Therefore it isn’t.

Of course, nobody phrases it that baldly. They’re more likely to claim that “there’s no mechanism in physics which could explain these results.” Wagenmakers et al went so far as to claim that Bem’s effect can’t be real because nobody is bankrupting the world’s casinos with their psychic powers, which is logically equivalent to saying that protective carapaces can’t be advantageous because lobsters aren’t bulletproof. As for the whacked-out argument that there’s no theoretical mechanism in place to describe the data, I can’t think of a more effective way of grinding science to a halt than to reject any data that don’t fit our current models of reality. If everyone thought that way, earth would still be a flat disk at the center of a crystal universe.

Some people deal with their incredulity better than others. (One of the paper’s reviewers opined that they found the results “ridiculous”, but recommended publication anyway because they couldn’t find fault with the methodology or the analysis.) Others take refuge in the mantra that “extraordinary claims require extraordinary evidence”.

I’ve always thought that was a pretty good mantra. If someone told me that my friend had gotten drunk and run his car into a telephone pole I might evince skepticism out of loyalty to my friend, but a photo of the accident scene would probably convince me. People get drunk, after all (especially my friends); accidents happen. But if the same source told me that a flying saucer had used a tractor beam to force my friend’s car off the road, a photo wouldn’t come close to doing the job. I’d just reach for the Photoshop manual to figure out how the image had been faked. Extraordinary claims require extraordinary evidence.

The question, here in the second decade of the 21st Century, is: what constitutes an “extraordinary claim”? A hundred years ago it would have been extraordinary to claim that a cat could be simultaneously dead and alive; fifty years ago it would have been extraordinary to claim that life existed above the boiling point of water, kilometers deep in the earth’s crust. Twenty years ago it was extraordinary to suggest that the universe was not only expanding but accelerating. Today, physics concedes the theoretical possibility of time travel (in fact, I’ve been led to believe that the whole arrow-of-time thing has always been problematic to the physicists; most of their equations work both ways, with no need for a unidirectional time flow).

Yes, I know. I’m skating dangerously close to the same defensive hysteria every new-age nutjob invokes when confronted with skepticism over the Healing Power of Petunias; yeah, well, a thousand years ago everybody thought the world was flat, too. The difference is that those nutjobs make their arguments in lieu of any actual evidence whatsoever in support of their claims, and the rejoinder of skeptics everywhere has always been “Show us the data. There are agreed-upon standards of evidence. Show us numbers, P-values, something that can pass peer review in a primary journal by respectable researchers with established reputations. These are the standards you must meet.”

How often have we heard this? How often have we pointed out that the UFO cranks and the Ghost Brigade never manage to get published in the peer-reviewed literature? How often have we pointed out that their so-called “evidence” isn’t up to our standards?

Well, Bem cleared that bar. And the response of some has been to raise it. All along we’ve been demanding that the fringe adhere to the same standards the rest of us do, and finally the fringe has met that challenge. And now we’re saying they should be held to a different standard, a higher standard, because they are making an extraordinary claim.

This whole thing makes me deeply uncomfortable. It’s not that I believe the case for precognition has been made; it hasn’t. Barring independent confirmation of Bem’s results, I remain a skeptic. Nor am I especially outraged by the nature of the critiques, although I do think some of them edge up against outright dishonesty. I’m on public record as a guy who regards science as a knock-down-drag-out between competing biases, personal more often than not. (On the other hand, if I’d tried my best to demolish evidence of precognition and still ended up with “substantial” support in one case out of nine, I wouldn’t be sweeping it under the rug with phrases like “never compelling” and “except perhaps” — I’d be saying “Holy shit, the dude really overstated his case but there may be something to this anyway…”)

I am, however, starting to have second thoughts about Laplace’s Principle. I’m starting to wonder if it’s especially wise to demand higher evidentiary standards for any claim we happen to find especially counterintuitive this week. A consistently-applied 0.05 significance threshold may be arbitrary, but at least it’s independent of the vagaries of community standards. The moment you start talking about extraordinary claims you have to define what qualifies as one, and the best definition I can come up with is: any claim which is inconsistent with our present understanding of the way things work. The inevitable implication of that statement is that today’s worldview is always the right one; we’ve already got a definitive lock on reality, and anything that suggests otherwise is especially suspect.

Which, you’ll forgive me for saying so, seems like a pretty extraordinary claim in its own right.

Maybe we could call it the Galileo Corollary.

I could not resist noticing your punny typo:

asks you to, say, classify some of them by color, you’ll remember the ones you classified more redily than the others

Arrrgh.

Fixed. Probably a million others. After three days I’m so sick of researching and writing about this I just dumped the whole damn wadge without a proper proof-read. Thanks.

It would have been clever if I’d meant to spell it that way, though, wouldn’t it?

How much of science starts with, “I noticed this weird thing”?

I don’t think extraordinary evidence means what they’re claiming: research that comes up with unexpected results must meet the same standards as every other published paper, but then should be followed up with further research.

There is no escape clause for “I don’t like what you are saying, so you must use p=0.001 as your standard of significance even though research I like gets to use p=0.05.”

Instead, the response should be “That doesn’t make sense to me, but it was conducted and presented with rigor appropriate to the field, so I’m going to try to replicate it.”

Something that pushes the boundaries should spur more research, not incite criticism beyond what an unremarkable paper would incur.

*explodes with joy*

The critique of “he should have used Bayesian statistics instead of Frequentist tests for significance” is utter hogwash. The split between Bayesians and Frequentists is a deep rooted ideological difference on how to interpret probabilities and how to do statistics and goes beyond just a methodological choice. I’m biased towards the Frequentist position myself, because our collaborator in Statistics is a Frequentist (we do Molecular Phylogenetics), and we have similar deep rooted arguments about whether Bayesian or Maximum-Likelihood phylogenetics is more appropriate. At least in my field I am often troubled by Bayesian methods because of issues like inadequate mixing of MCMC samplers and what to do in terms of assigning prior probabilities.

As for what priors they chose in the Bayesian analysis, the usual thing to do, if you have no information, is to simply assign equal probabilities with the priors, giving precog versus non-precog a 50/50 split. Not sure if that is what they actually did but absent a specific reason for not doing so it would seem to be the way to do it.

First, why you need to correct for multiple comparisons: Neural correlates of interspecies perspective taking in the post-mortem Atlantic Salmon.

Also, Andrew Gelman (poly-sci stats guru) has a couple of good discussions on this paper here and here. Note that the first one explicitly mocks the “Bayesian” analysis you linked to, which is significant since Gelman wrote one of the major books on the subject.

One important point is the overestimation of small effects. Say you have a question that should have a 50/50 probability of being answered “correctly”. If you ask 500 people, it’s statistically significant in the classic definition if >270 or <230 answer correctly. If the true probability of a correct answer is 50%, this gives a ~2.2% chance of finding a positive ESP effect and a ~2.2% chance of a negative ESP effect.

However, say that you actually suspect that, due to ESP, the chance of answering correctly is really 51%. Now, you have a ~7.0% chance of (correctly) finding a positive ESP effect (though you, by necessity, will find an effect of 8%+), but you also still have a ~0.9% chance of finding a negative ESP effect. So, not only are you only 3 times more likely to find the effect than you would be if it didn't exist, but if it does exist and you find a statistically significant result, you still have an 11% chance of finding an effect in the wrong direction! And this is just with a simple binomial test – these problems tend to get worse with more complicated data.

Unfortunately, correcting for multiple comparisons won't help that problem. And, unless you replicate with much greater sample sizes, failing to replicate the results doesn't actually say much about the validity of the earlier results.
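For anyone who wants to check the binomial arithmetic in this comment, here is a small Python sketch that computes the exact tail probabilities. It takes the cut-offs literally as stated (more than 270 or fewer than 230 correct out of 500), so the exact figures it prints may differ somewhat from the rounded percentages quoted above, which may have come from slightly different cut-offs or from an approximation. The function names are illustrative only.

```python
from math import comb


def binom_pmf(n, k, p):
    """Probability of exactly k successes in n trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def upper_tail(n, k, p):
    """P(X >= k)."""
    return sum(binom_pmf(n, i, p) for i in range(k, n + 1))


def lower_tail(n, k, p):
    """P(X <= k)."""
    return sum(binom_pmf(n, i, p) for i in range(0, k + 1))


N = 500
HI, LO = 271, 229  # ">270" and "<230" correct answers, read literally

for p in (0.50, 0.51):
    pos = upper_tail(N, HI, p)  # chance of a "significant" positive effect
    neg = lower_tail(N, LO, p)  # chance of a "significant" negative effect
    share = neg / (pos + neg)   # share of significant results pointing the wrong way
    print(f"true p={p:.2f}: positive {pos:.4f}, negative {neg:.4f}, wrong-direction share {share:.3f}")
```

Changing `HI` and `LO` shows how sensitive the percentages are to exactly where the significance cut-off falls, which is worth keeping in mind when comparing them with the figures in the comment.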

This is that second link from Andrew Gelman:

http://www.stat.columbia.edu/~cook/movabletype/archives/2011/01/one_more_time_o.html

If you search for something unlikely long enough, you will eventually find it just by pure chance. So, if no one is able to replicate the results of this experiment (in the next few years) I would be inclined to not believe it. So… the jury’s still out on this one. We’ll see…

“Extraordinary claims require extraordinary evidence” isn’t so subjective when you relate it to the idea of the “universal prior”.

http://www.scholarpedia.org/article/Algorithmic_probability

Add one bit to the shortest program implementing your guessed laws of physics, halve the likelihood that (in the pure stab in the dark scenario) your guess is correct.

How many bits would have to be added to add support for psi? It might be simpler to take evidence of psi as evidence we are all living in some alien’s universe simulator.

Laplace’s principle is about the total amount of evidence needed to be convinced. Your qualms, however, sound like they are about the standards being used to judge if a study counts as evidence at all. Whether Bem’s results count as evidence for precognition and whether they are convincing are two different issues.

The same standards should apply to every study, whether extraordinary or not. Results supporting ordinary claims still need to be checked. Unless some clear issue about the computer algorithm is raised, I think Bem succeeded in producing evidence for precognition. Even if the results are never replicated, we should consider precognition a little more likely. Laplace comes into play by saying it will take more than this to convince us precognition actually exists.

“The question, here in the second decade of the 22nd Century, is: ”

It’s 2011, not 2111.

With regard to figure 2 from the appendix to the Wagenmakers et al. paper, their description seems reasonable enough. Disclosure: I’m a Bayesian.

The left hand side is the log of the Bayes factor – roughly the log of how much you should increase your perceived probability of the hypothesis being true given your prior information. It’s not a nonsense that this differs based on the prior – If my prior beliefs make the observed data very unlikely then observing that data can shift my beliefs far further.

The important point is that the Bayes factor, even in the bottom right, is less than 10. Trying to crowbar (technically inappropriate) frequentist notation in, then even with a sympathetic prior there’s only a p = 0.1 result, at best. (This isn’t right because p-values and BFs aren’t really comparable without reference to a prior, but it’s the closest approximation I can get without being excessively technical.)

“Of course, nobody phrases it that baldly.”

Should probably be “boldly”?

This just shows that the scientific process, in spite of its flaws, works pretty well, and also demonstrates that the author knows the limitations of the process. As you mentioned, the author included a level of detail about his protocol and analysis that is almost never seen in peer reviewed papers. This is probably because if he had limited the information in the paper to the level that is commonly found, the natural biases of the reviewers would have resulted in a recommendation to reject. But when these same reviewers were provided with the details, they recommended publication even though they probably disagree with the conclusions.

The ultimate example of extraordinary claims requiring extraordinary proofs was the Origin of Species… At the time that natural selection was proposed, it was little more than a thought experiment supported by a ridiculous number of observations. After all, the underpinning mechanism, DNA, was still well in the future. But because it was so well explained, and because the observations were so well documented (and repeatable), most arguments against it have been based on emotion and religion, not evidence.

And before anyone raises the point, I am not trying to equate Bem’s contribution to science with Darwin’s. Only that they both realized that the only way their theories stand/stood a chance of being accepted is/was to provide extraordinary evidence.

@ Ausir: “bald” can be used to mean “having no hair,” but also, “directly, without covering or ornamentation,” as in a “bald statement of fact.”

There can also be an implication of being slightly shocking in the lack of care in not “covering” the fact being stated with polite or indirect language.

Another common usage: “He’s a bald-faced liar!” It means the man didn’t just lie, he didn’t even bother to obscure himself with a beard, that he lies without shame or fear of being recognized.

“bold-faced liar!” would be an eggcorn. i just wanted to pop in to leave a comment about eggcorns in case anyone hadn’t heard of them. it is a fun distraction to go look them up. and ‘eggcorn’ was included in the OED recently. how cool is that?

hee. So is bold-faced liar the eggcorn or is bald-faced?

It would be perfectly reasonable that bald-faced is, for instance, a southern-us eggcorn for bold-faced, and I was unaware.

And really funny. I remember fondly at school asking someone if they wanted a schnitt, not realizing that it didn’t mean “slice of apple” in the wider world.

Video of Peter reading at Chizine from Crysis Legion is up:

http://www.specfic-colloquium.com/apps/videos/videos/show/12167021-peter-watts-reads-at-the-chiaroscuro-reading-series-11-01-2011

“Bald faced” goes back to the 18th century or so. “Bold Faced” only came up with the rise of home word-processing software.

I want to know where I sign up to look at porn for science. I love science.

David S., the studies I’ve read about where porn is used for science are the ones where they measure responses to different types of porn and show that men respond to a very specific range of stimulus whereas women respond to almost anything, including scenes of bonobos mating.

I’ve sometimes taken that to mean that males have sexual orientation in a way that is different from females. Bisexual men are unicorns? But anyway, recently there was a scuffle in the science blogging world about “just so” stories and the evolutionary psychology slant on these results: that females’ response to almost anything is an evolutionary strategy meant to protect against rape.

oh, and there was an MRI of a couple having intercourse, though would it be considered science, exactly?

Dear Peter!

I believe you and/or the commenters are being overly unkind to Pharma. The only place where status of evidence is approximately as shaky as status of evidence in these, quite entertaining papers on “psi” are the ones that deal with comparative efficacy and/or “subtle” novel effects (think the second-gen antipsychotics controversy).

Having said that, I personally think that kind mister Bem is an ingenious IRL troll.

He has found out that the “social sciences” (lol) community is particularly prone to publishing, and consuming, moderately hard sci-fi as “scientific literature” (it should be noted that the field of psychology specifically does have a tendency for accumulating prodigious amounts of crap, and, in fact, many of the modern “schools” of this field rest upon unfalsifiable constructs)

And, upon growing old and famous, upon reaching the part of a life when man no longer needs to care for his reputation or money or anything like that and can afford to do stuff for sheer fun, Bem decided to milk this finding for what it’s worth in lulz by engineering a typical socSci bollocks-paper, but in a way that the “bollocks” part would stick out without becoming easily attacked via criticism methods typical of “social sciences”.

He engineered a very subtle, very fine troll article (or more like, he did that twice already)

The people in socSci realize that (maybe not consciously so, but they get a “can’t be right” vibe all right), but can’t do anything about it, because a solid chunk of “social sciences” is made up of this kind of meticulously engineered, non-reproducible bollocks and they can’t condemn our beloved troll without condemning themselves and/or their upstanding peers (but dear Cthulhu, do they try 🙂 )

P.S.:

Peter, do you remember the Blindsight Vampire evolution and raperoduction thread on this very blog?

I think you should try to publish a paper about the evolution of human behavior that would unsubtly imply the past existence of (thinly disguised) Blampires in some “evolutionary psychology” journal 😀

Evopsych crowd would slurp that up as corn syrup.

Don’t know about social sciences in general, but psychologists are actually more skeptical of this stuff than other scientists, according to Bem (and his cited source): “A survey of 1,100 college professors in the United States found that psychologists were much more skeptical about the existence of psi than their colleagues in the natural sciences, the other social sciences, or the humanities (Wagner & Monnet, 1979). In fact, 34% of the psychologists in the sample declared psi to be impossible, a view expressed by only 2% of all other respondents.”

Possibly for the same reason that most science fiction writers are UFO skeptics; defensive distancing from something that seems to hit a bit too close to home.

P.S. Actually, it has been suggested that Bem might have written this whole thing as a joke on the community at large; it’s the only way some folks can reconcile his overall cred with this aspect of his research career.


Peter wrote:

This, so much this.

I’d go further and declare him a comedy genius.

do note that while at it, Magnificent Daryl also trolls the evopsych crowd.

I wonder how many people will he offend with his next paper…

Oh noes, blockquote no workey 🙁

Wish I knew statistics to actually make thoughtful criticism on the subject 🙁

Wow, you definitely make a good point… as a skeptic myself it worries me that the terminology “extraordinary claims” is so vague in relation to the extraordinary finds that are happening all the time in this day and age. But then again I don’t buy dark matter either… heh

That reminds me… when I was first reading this it made me think of the Jref million dollar prize…

I’ve often thought that one of the best places to look for evidence of any paranormal phenomena (‘magic’, psi, religious shenanigans, you name it) would be in animals exhibiting odd behavior or characteristics. It stands to reason that if such forces were active in the world then at some point evolution would have gotten a hold of them and had a field day. Apart from random genetic drift (which, admittedly, can put a stop to novel features pretty swiftly) I don’t think it’s too far a stretch to assume that a mutation that took advantage of principles in theoretical physics would become fixed in a population.

As a side note, I’ve always thought it would be funny to start printing textbooks with the word ‘magic’ replacing the word ‘energy’.

I never could get my head around potential magic.

Interesting that Peter would actually have some good points in defense of Bem’s paper…(I’m hovering around the idear that 53% isn’t that great an effect-it’d be like having a superpower-say, invulnerability, that only sorta works some of the time, and when it does, not as well as one might want.)..oh, and to date, it seems no one has noticed that Bem is a traditional acronym for-‘Bug Eyed Monster.’ Mind you, it’s a fairly old acroslang (uh, is that a new word?) but I found it amusing. On a trivia note, I never much liked the character Donna Troy in Star trek Next Generation-“I sense…anger.” “Yeah, his screaming and being red in the face might have been a clue.” To my mind, it works like this: hey, something like remote viewing might well be a reality-so what? We have spies and cameras for that sort of thing, and they’re way better at gathering data. Or the ability to stop a guy’s heart at a distance-yep, got stuff that can do that, too. I think it’s called chemicals or something…

Dear Peter: Your post has reinforced my thinking about several subjects, including precognition. 1. I once taught a philosophy of science course using a text based on Bayes. Since then I’ve had exactly the same problem with prior probabilities that you have. Besides introducing biases I think this reflects various “anti-realist” views of science, in which the question of truth, or closeness to the truth, is a priori excluded by the philosopher. It’s a feeling that I cannot make more precise. 2. The question of the statistical respectability of psychology (or: specific types thereof) is important and should be addressed with force. It’s not. 3. Finally, a mathematician with a broad knowledge of physics told me that “Physics is up for grabs.” I’ll take his word for it, especially now that I’ve read a lot about time-travel, quantum teleportation (yesterday), and various ways of claiming that time does not exist. Given all this your open-end is justified. E.g., if a well-formulated claim that time either does not exist or has no preferred direction can be supported to a reasonable extent, then what can rule out Bem-results, for which the term *pre*-cognition would be a misnomer? Nothing, perhaps.

Brycemeister, “On a trivia note, I never much liked the character Donna Troy in Star trek Next Generation-”I sense…anger.” “Yeah, his screaming and being red in the face might have been a clue.” “

Trivial tangents SQUIRREL!

Yeah but not to say that someone couldn’t make a good character with that ability. Think if someone like Desjardins was an empath. Or a used car salesman. Best manipulator EFAR.

Great villains. someone who is a highly accurate empathy reader but has no empathy.

Or if you didn’t want a simple villain, then someone who is an immature empath who has empathy but has trouble not manipulating people due to it. borderline personality, perhaps.

anyway, you could set up all kinds of cognitive dissonances. much fun.

It does make me think that I haven’t really seen anything well done with the telepath trope. Although I do recall a movie featuring a guy who has an accident (I think? Been a long time since I’ve seen it) and wakes up with ultimate psychic powers-pretty much anything he wants to do, he can. And he goes hog wild with it, of course. Decent movie with a name I can’t remember.

And of course, what also bugged me was that Troy could read alien minds-so now she knows a zillion languages?

Offhand, there’s been a number of somewhat more substantial psychic tests where subjects are hooked up to a variety of instruments that read brainwaves, heart rate and a variety of physical responses, that do not depend on statistics, and apparently report some rather interesting findings in support of psychic phenomena. These get ignored, it seems. As I’ve stated, and to clarify-I have no problem whatsoever with the possibility and/or potential reality of psychic phenomena (although I do think it’s rare), I just tend to think as a phenomenon of the mind, it suffers from an ambiguity or perhaps from being a bit of a relic ability. Y’know, like, being able to move a matchbox-not exactly useful under any circumstance. Or reading minds-mostly useful at a distance, and superseded by technology. And suffers from the problem of different languages.

That bottom right Bayes factor test is not compelling because of the false discovery rate expected from this statistic. Given Bem admits to exploratory analyses, his results here are dubious. He needs to admit to all of the statistics he tried and failed at before I believe anything he claims as “statistically significant”.

My point of view isn’t about whether psychic abilities exist-given some of the aspects of physics, I can see a certain amount of psi to be actually quite possible. But if it does exist, it seems that the results, or effect, is a bit trivial. Wow, hey look-that guy can move a watch hand-wow! Or, if spoon bending exists-yeah, right, like that’s an awesome power. Not even useful in the kitchen.

It seems to me that the whole proving psi exists might be more of a means to upset the applecart-compel a major change in worldview. No problem with that, either. Science, as a system (it’s just one way of observing consensus reality, among many other ways of observing consensus reality or reality). Problem is, why bother going at something that seems rather too vague, or, well, vague is the best term I can think of at the moment, when there are lots of other ways to compel changes in the way of thinking?

1. Bem was a guest on The Colbert Report on 27 Jan 2011! The video will probably be up on the CR website for a while yet. If he has gone crackpot, it was not apparent from the interview.

2. Brycemeister: Agree that ability to move kitchen matches around, if it exists, is not super-useful. The importance, imho, is that it violates cause and effect, which means faulty experiment, fluke results, bad methodology, bad statistics, fraud OR we need to reconsider some current basic assumptions about how the universe works. This is really really exciting – every so often physics is revolutionized when the pile-up of little anomalies becomes too great to ignore. In fact, yes, who cares if some college student occasionally can tell when he is about to see porn, since the effect is really small. But. Information flowing both forward and backward in time in such a way that we can detect it, that is huge. Imho.

3. In ST mythos, there is an assumption of some universal mental constructs, such as sexual reproduction, etc. We know this because the universal translator works in near real-time. It can ID universal ideas and, working from there, approximate languages it has never previously encountered. Also consider that Mr. Spock is able, during mind-meld, to extract ideas and formulate English language equivalents during first-contact scenarios, and he is probably translating from his native tongue first. (Devil in the Dark: the Horta does the same, in fact. NO KILL I is a little ambiguous, but there is clearly a common idea field from which both can draw.) Also note that the Horta demonstrates certain emotions are universal, so even if you don’t have a limbic system, you can grieve for dead offspring.

Troy, as annoying as she is, doesn’t need to know your language to get a sense of your feelings, whether you are lying, etc. She naturally does a limited form of what Vulcans do with training and physical contact.

http://www.forevergeek.com/2011/01/maximo-riera-octopus-chair/

“Given Bem admits to exploratory analyses, his results here are dubious. He needs to admit to all of the statistics he tried and failed at before I believe anything he claims as “statistically significant”.”

I thought this was the most compelling argument against Bem’s work here; exploratory analysis is, almost by definition, not amenable to the same statistical analysis that ordinary experiments are. The intuition is pretty clear:

Imagine you claim that you can affect the outcome of a coin flip. You start tossing coins and recording the proportion of heads. Now, if you have a fair coin, this should approach 0.5 in the long run; the key is, long run. In a normal experiment, you would flip a given number of times, and then test to see if your proportion (say, 0.6) is statistically distinguishable from the fair value. However, imagine that instead of doing a fixed number, you stop after each coin flip and then test to see if your proportion is different from the fair value. If it is, you stop; otherwise, you continue flipping.

Most people can immediately see that this would lead to a much higher false positive rate than the same test would have under a normal experiment; essentially, what’s occurring here in statistical terms is that the trial size is not independent of the outcome (independence being a classical requirement for most statistical techniques). I wouldn’t say that this is a slam-dunk knock-out for Bem’s work, given that he hasn’t actually admitted to this explicitly, but his experiment shows serious signs of being designed and run in a similar fashion.
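For anyone who wants to see the size of the problem rather than take it on intuition, here is a rough Python sketch of the coin-flip thought experiment. To be clear about what is assumed: the 200-flip cap, the 10-flip minimum before the first peek, the normal-approximation z-test at the 5% level, and all the function names are my own illustrative choices. None of it is taken from Bem's paper or describes what he actually did.

```python
import math
import random

Z_CRIT = 1.96  # two-sided 5% cutoff under a normal approximation (assumed test)


def is_significant(heads, n):
    """Two-sided z-test of the observed proportion of heads against 0.5."""
    z = (heads - 0.5 * n) / math.sqrt(0.25 * n)
    return abs(z) > Z_CRIT


def fixed_design(rng, n_flips):
    """Flip a fair coin n_flips times, then test exactly once."""
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))
    return is_significant(heads, n_flips)


def peeking_design(rng, max_flips, min_flips=10):
    """Test after every flip (once min_flips is reached); stop at the first 'hit'."""
    heads = 0
    for n in range(1, max_flips + 1):
        heads += rng.random() < 0.5
        if n >= min_flips and is_significant(heads, n):
            return True  # declared "significant" although the coin is fair
    return False


def false_positive_rates(n_experiments=2000, n_flips=200, seed=0):
    """Fraction of fair-coin experiments that wrongly report an effect."""
    rng = random.Random(seed)
    fixed = sum(fixed_design(rng, n_flips) for _ in range(n_experiments))
    peek = sum(peeking_design(rng, n_flips) for _ in range(n_experiments))
    return fixed / n_experiments, peek / n_experiments


if __name__ == "__main__":
    fixed, peek = false_positive_rates()
    print(f"fixed-n false positive rate: {fixed:.3f}")
    print(f"peeking false positive rate: {peek:.3f}")
```

The coin is fair in every simulated experiment, so every "significant" result is a false positive. The fixed design should land near the nominal 5%, while the stop-when-significant design should come out several times higher, and the gap widens as the flip cap grows.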

However, my real point here is that this is SUPER common in most social sciences. The reason is not because they are bad dumb people who are stupid at doing science, but rather that:

1) Their disciplines typically have a much less formal background in experimental sciences. Typically, most data in social sciences comes from quasi-experiments where you’re already dealing with experiments that don’t fit your mold completely well, and so exploratory work like this (call it “peeking”) is actually useful in lots of contexts, and the errors it induces tend to be mild compared to the other options.

2) There’s typically a limited opportunity to study things, so often you will send yourself back to the lab to confirm or reject certain conclusions (chiefly in order to get published). Given the design of most experiments (i.e. multiple sessions, distributed across times), it’s not usually obvious where to draw the line between a normal experimental design and peeking. If you are already running 9 months of experiments, does it really matter if you add another month? Or suppose you detect an error in your design early on; should you correct, or start over? Is the bias from the design flaw better or worse than the bias from fixing it mid-way through treatment? These are very real concerns, especially in path-breaking research like Bem’s.

Anyways, to bring this back to Peter’s point, maybe Bem garbled the statistics and his results aren’t significant. Unless we feel like being particularly bloody-minded and just abandoning exploratory science in areas outside of a medical lab, we should still laud him for the attempt. His exploratory work might be wrong, but it will provoke the kind of research that will definitively answer the question — which is, in my mind, kind of what science is supposed to do. I’m sure, with unlimited funding and time, Bem would have done it himself and published a paper with (or without) replication. However, that’s why we have a scientific community.

So, as many are fond of saying, it’s time for other social scientists to “shut up and calculate”; barring a major revelation about Bem’s methodology, they need to hit the lab. Frankly, even if there IS a major revelation, they should hit the lab anyways just to get some more evidence and answer this question. Given the amount of attention and column-inches devoted to this result already, I don’t think this is going to be a problem.

Lots of food for thought. An idea I’m considering is that time is not static, in the way that we think of static. Think of time as a video game, only played in 3D-like the holodeck-only with more than forcefields and light, or whatever it is that they use, and then think of time as having both a static quality, and uh, something akin to ‘movement.’ That is, like a video game, scenes can be replayed. As well, one can play the game in many different ways, attain different levels, and, of course, design entire levels, like in Doom. Or environments as in Minecraft, only with the very stuff of space and time.

I don’t see this as being in any opposition to current models in quantum physics, and even allowing for flexibility-there’s no past, future, only a continual act of creation. One problem with this, is how and where the static quality comes in-admittedly a very big weak point…but anyways, I ain’t no physicist, and it would allow for a certain amount of psychic activity-and would allow for the existence, or non-existence of a mind, or even things somewhat like minds, only not quite as we know of them. Aggregates of plasmic information that use electromagnetic qualities as a storage/sensory device, and use something that isn’t binary as coding information, maybe trinary or uh-quadrinary? Dunno if’n I got that right. Like, ‘yes, no, maybe and something that has qualities of the first three while being unique to them…